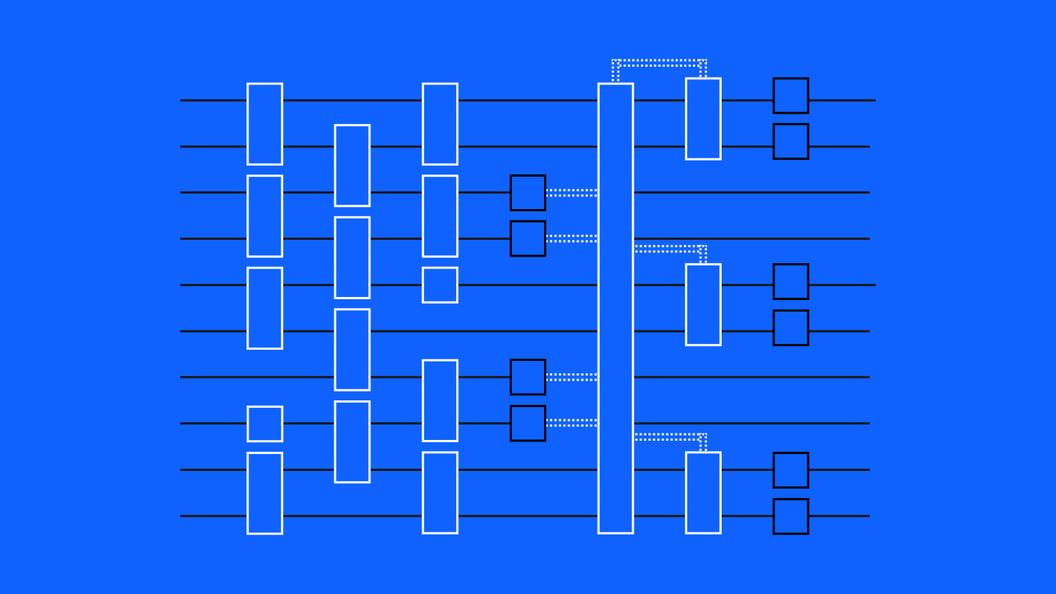

Quantum Volume is a single-number metric that can be measured using a concrete protocol on near-term quantum computers of modest size. The Quantum Volume method quantifies the largest random circuit of equal width and depth that the computer successfully implements. Quantum computing systems with high-fidelity operations, high connectivity, large calibrated gate sets, and circuit rewriting toolchains are expected to have higher Quantum Volumes.

We previously stated that in order to achieve Quantum Advantage in the 2020s, we’d need to double the Quantum Volume of our processors every year. Well, we’re excited to announce that, with this latest result, we have doubled it for the sixth time in five years. Most importantly, this improvement joins a drumbeat of improvements across performance attributes as we work along our technology roadmap to bring about Quantum Advantage sooner.

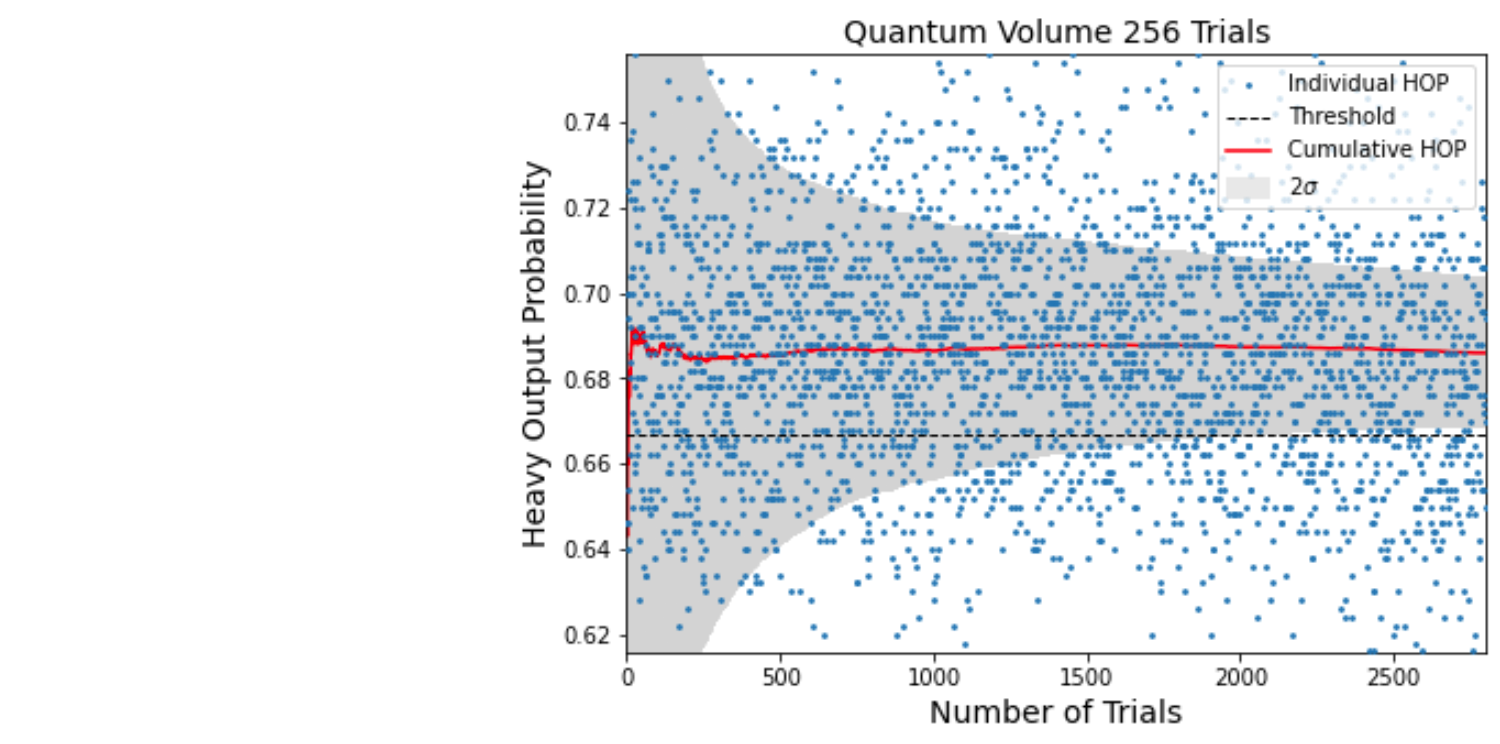

Quantum Volume is a measure of the largest square circuit of random two-qubit gates that a processor can successfully run. We count a run as successful when the processor produces the heavy outputs (the most likely outputs of the circuit) more than two-thirds of the time, with 2σ confidence. If a processor can use eight qubits to successfully run a circuit with eight time steps' worth of gates, then we say it has a Quantum Volume of 256: we raise 2 to the power of the number of qubits (2^n, so 2^8 = 256).
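To make the definition concrete, here is a minimal Python sketch of the bookkeeping involved. It assumes the usual convention from the Quantum Volume literature that "heavy" means an ideal probability above the median of the ideal output distribution. The function names and the two-qubit toy distribution are hypothetical and chosen only for illustration; they are not taken from our experiment or from any library.

```python
from statistics import median
from typing import Dict, Set


def quantum_volume(n_qubits: int) -> int:
    """Quantum Volume reported for a passing square circuit of width and depth n."""
    return 2 ** n_qubits


def heavy_outputs(ideal_probs: Dict[str, float]) -> Set[str]:
    """Return the bitstrings whose ideal probability is above the median.

    Assumption: ideal_probs lists every one of the 2^n bitstrings,
    including those with probability zero; otherwise the median is skewed.
    """
    cutoff = median(ideal_probs.values())
    return {bits for bits, p in ideal_probs.items() if p > cutoff}


def heavy_output_fraction(counts: Dict[str, int], heavy: Set[str]) -> float:
    """Fraction of measured shots that landed on a heavy output."""
    shots = sum(counts.values())
    return sum(c for bits, c in counts.items() if bits in heavy) / shots


# Hypothetical two-qubit example (illustrative numbers, not real data).
ideal = {"00": 0.40, "01": 0.10, "10": 0.35, "11": 0.15}
measured = {"00": 380, "01": 120, "10": 330, "11": 170}

heavy = heavy_outputs(ideal)
fraction = heavy_output_fraction(measured, heavy)
print(sorted(heavy), fraction, quantum_volume(8))
```

In a real measurement the ideal distribution comes from classically simulating each random circuit, and the heavy-output fraction is averaged over many such circuits before it is compared against the two-thirds threshold.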

Note that the n in Quantum Volume does not limit you to running circuits with only n qubits and n time layers, as demonstrated in several papers, such as “Error Mitigation for Universal Gates on Encoded Qubits,” published in Physical Review Letters.

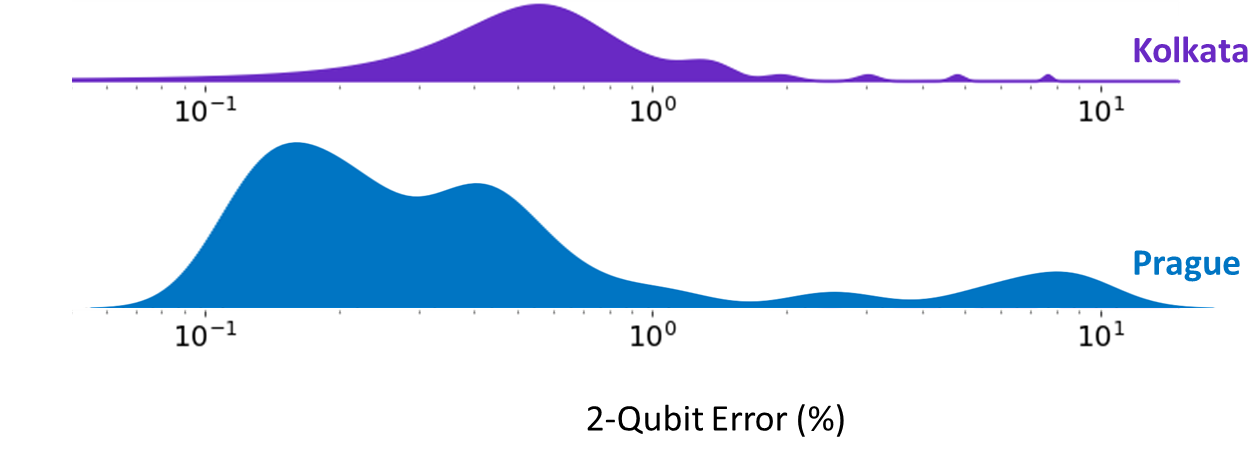

While the previous two jumps in Quantum Volume were attributed to improvements in our understanding of how to deal with coherent noise, plus better software and control electronics, reaching 256 was made possible by a new processor that allows us to implement faster, higher-fidelity gates. Key to this advance was finding ways to reduce spectator errors (errors caused by calculations on nearby qubits) while still achieving faster two-qubit gates.

This plot demonstrates a decrease in error rates between the Falcon r10 chip in the Prague device used to calculate QV 256 and a previous Falcon r5 chip in the Kolkata device.

Thanks to these advances, we saw the bulk of our two-qubit gates approaching a 99.9% gate fidelity, meaning they would err only once in 1,000 times (though there were some outliers). And we were still able to see strong coherence times despite performing the experiment on a new processor. When we ran the Quantum Volume experiment on eight qubits for eight time steps of random two-qubit gates, we measured heavy outputs an average of 68.5% of the time, with 2σ confidence high enough to successfully hit 256.

We achieve a given Quantum Volume when the heavy-output probability measured on the corresponding Quantum Volume circuits exceeds two-thirds with 2σ confidence, as demonstrated in this plot.
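The pass criterion can be sketched in a few lines of Python. This is an illustration under stated assumptions, not our analysis code: it treats each trial as a binomial sample, so the standard error is sqrt(p(1 − p)/N), which is a common simplification in Quantum Volume analyses. The function names are hypothetical, and the number of trials in our experiment is not stated here, so any trial count you pass in is your own assumption.

```python
import math

THRESHOLD = 2 / 3  # heavy-output probability a passing circuit must exceed


def passes_qv_test(mean_hop: float, n_trials: int, z: float = 2.0) -> bool:
    """True if the mean heavy-output probability clears 2/3 by z sigma.

    Assumption: binomial standard error sqrt(p * (1 - p) / n_trials).
    """
    sigma = math.sqrt(mean_hop * (1.0 - mean_hop) / n_trials)
    return mean_hop - z * sigma > THRESHOLD


def min_trials(mean_hop: float, z: float = 2.0) -> int:
    """Smallest trial count at which passes_qv_test becomes True."""
    margin = mean_hop - THRESHOLD
    if margin <= 0:
        raise ValueError("mean heavy-output probability must exceed 2/3")
    # Solve mean_hop - z * sqrt(p(1-p)/N) > 2/3 for N (strict inequality).
    bound = z ** 2 * mean_hop * (1.0 - mean_hop) / margin ** 2
    return math.floor(bound) + 1


needed = min_trials(0.685)
print(needed, passes_qv_test(0.685, needed), passes_qv_test(0.685, needed - 1))
```

Under this simplified model, a mean of 68.5% sits less than two percentage points above the threshold, so on the order of a few thousand trials are needed before the 2σ lower bound clears two-thirds. This is why the confidence interval, and not just the mean, determines whether a Quantum Volume is achieved.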

Scale. Quality. Speed.

Quantum Volume is how we measure the quality of our qubits, but we measure the performance of our quantum processors with three attributes: scale, quality, and speed. We push forward on scale by building larger processors; we measure quality with Quantum Volume; and we measure speed with CLOPS (circuit layer operations per second).

Driving quantum performance: more qubits, higher Quantum Volume, and now a proper measure of speed with CLOPS.

This news comes on the heels of our recent announcement of our largest quantum processor yet: our 127-qubit Eagle processor.

At the 2022 APS March Meeting, we presented an in-depth look into the technologies that allowed the team to scale to 127 qubits, and the benchmarks of the most recent Eagle revision.

As always, we are following closely along our technology roadmap to constantly improve on all three of these fronts in order to bring about useful quantum computing as soon as we can.