Collocate applications pattern

Collocate applications with existing IBM Z applications and data, and consolidate existing distributed and cloud-native applications, for improved performance and sustainability

Overview

Many organizations rely on existing core applications on IBM Z to run their business-as-usual operations. The Systems-of-Record (SOR) data maintained by these core applications is shared across many other applications supporting different Lines of Business (LOBs). These supporting applications may reside on the IBM Z platform or on distributed systems. Driven by digital transformation, new cloud-native applications that access the core business functions and SOR data are also being developed to modernize core applications (see the Expose, Extend, Enhance and Refactor modernization patterns).

Applications residing off-platform (i.e., on distributed systems or public cloud) can suffer an order of magnitude higher latency when accessing core functions and SOR data on IBM Z. For applications that access core functions and data frequently, this also translates into lower throughput. Distributed and cloud-based applications also demand the same high level of SLAs (performance, scalability, security, availability) as the existing IBM Z-based functions.

Additionally, larger distributed applications with multiple components, and distributed applications with higher workloads, often experience poor performance, primarily due to high or unpredictable network latency between components across distributed platforms. Consolidating applications and deploying them on fewer, larger platforms can therefore reduce latency and improve performance, irrespective of whether they need to access z/OS-based applications and data. Managing fewer application components and platforms has other potential benefits: it can reduce operational complexity and management overhead (e.g., monitoring, disaster recovery) and improve sustainability metrics, all of which contribute to a lower Total Cost of Ownership (TCO).

Solution and pattern for IBM Z®

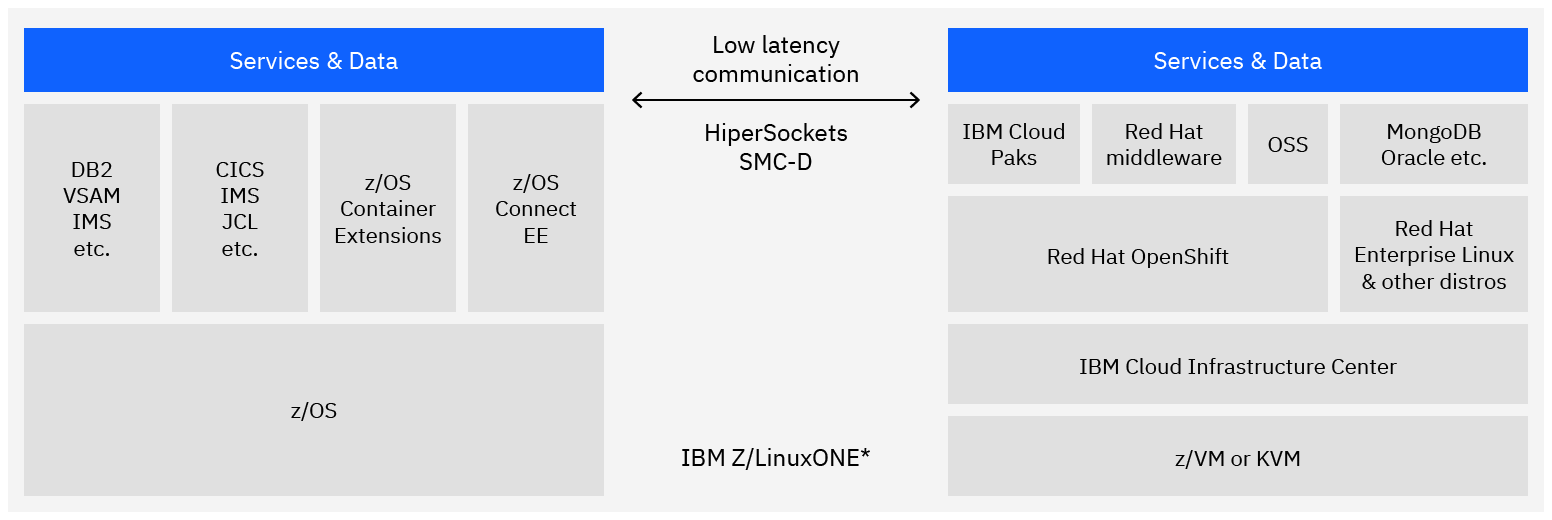

IBM Z provides the runtime environment both to run cloud-native applications and to host existing distributed applications migrated to IBM Z. Collocating supporting applications on the same platform as core SOR applications can thus reduce latency across these components (see below). Additionally, IBM Z supports development of new functions as cloud-native applications by providing tools and runtime capabilities for easily extending existing applications to invoke external APIs for cloud-native applications.

(* IBM LinuxONE only supports z/VM, KVM or native LPAR Linux based workloads)

Here are some key capabilities that can be leveraged through collocation:

- Better scalability: IBM Z provides a highly scalable environment (both vertically and horizontally, sometimes referred to as multi-dimensional scaling) to run existing distributed and cloud-native applications with higher performance. Vertical scale means adding physical capacity (e.g., compute, memory, network, storage) that is applied to the running environment for consumption. This is especially important for monolithic applications where horizontal scale may not be possible. Vertical scale is also valuable for strongly consistent databases (Oracle, DB2 etc.). Vertical scale reduces the need for network hops between components of the topology, improving latency and other performance characteristics. It also improves operational efficiency as there are fewer components to manage.

- Lower network latency: IBM Z provides technologies such as HiperSockets and SMC-D (Shared Memory Communications - Direct Memory Access) to transfer data at the speed of memory. In the credit card fraud detection scenario (Example 1 below), keeping the inferencing logic on IBM Z, or leveraging HiperSockets/SMC-D from Linux (e.g., Red Hat Enterprise Linux, SUSE Linux Enterprise Server or Ubuntu) or Red Hat OpenShift, allowed the provider to achieve ultra-low-latency in-transaction fraud detection. Other greenfield services can be added on IBM Z in the same way (natively or via IBM z/OS Container Extensions (zCX)).

- Improved security and SLAs: Some applications can additionally benefit from running on IBM Cloud, which not only offers strong support for strict governance and industry-specific compliance requirements but also provides IBM Cloud Hyper Protect Crypto Services (HPCS) and Hyper Protect Virtual Server (HPVS). HPCS provides cloud data encryption that’s protected by a dedicated cloud hardware security module and enables multicloud key management. HPVS grants you complete authority over your Linux-based virtual servers for workloads that contain sensitive data and business IP.

Here are some workload scenarios that can directly benefit from these capabilities through collocation:

A. Develop and deploy new cloud workloads: IBM Z supports a rich set of modern programming languages such as JavaScript (supported by Node.js), Go, Java and Python, as well as AI and ML frameworks. These enable core business logic and AI-based competitive differentiation, such as recommendations and fraud detection. As these applications typically have tight SLA envelopes and strict governance and compliance requirements, organizations build these greenfield apps on IBM Z/LinuxONE itself rather than on distributed systems (on-premises or public cloud). Modern DevSecOps (covered by the Enterprise DevSecOps pattern) enables industry-leading time-to-market for business innovation.

With multiple deployment options, you can choose the right environment for deploying new cloud-based applications, whether on-premises, on IBM Cloud or on public cloud. Low latency in accessing existing applications and SOR data is a key driver, but other technical and business drivers may lead to other choices. See the other patterns and examples of workload scenarios for alternative deployments. Below are examples of workloads that overwhelmingly benefit from collocated deployments.

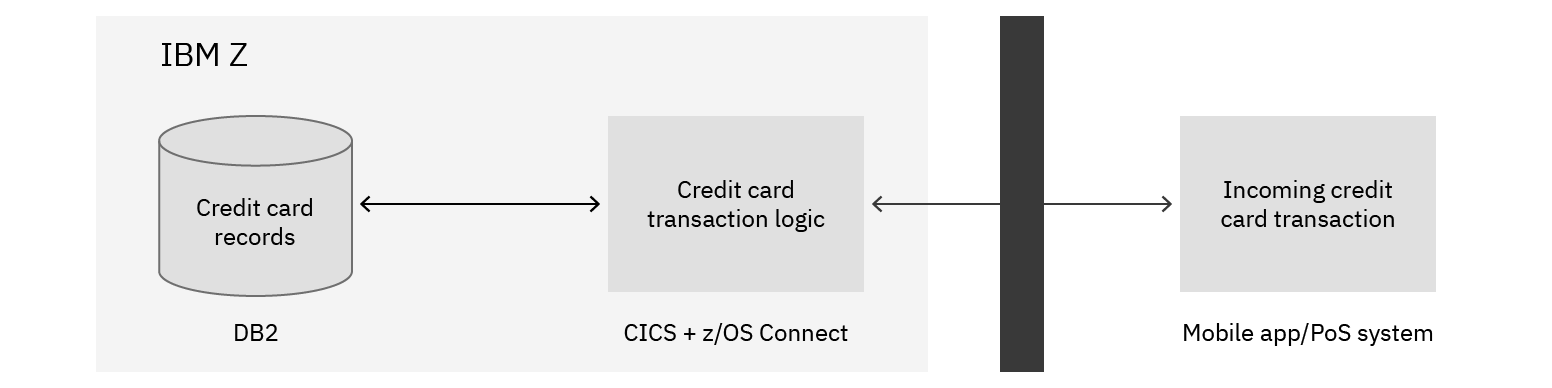

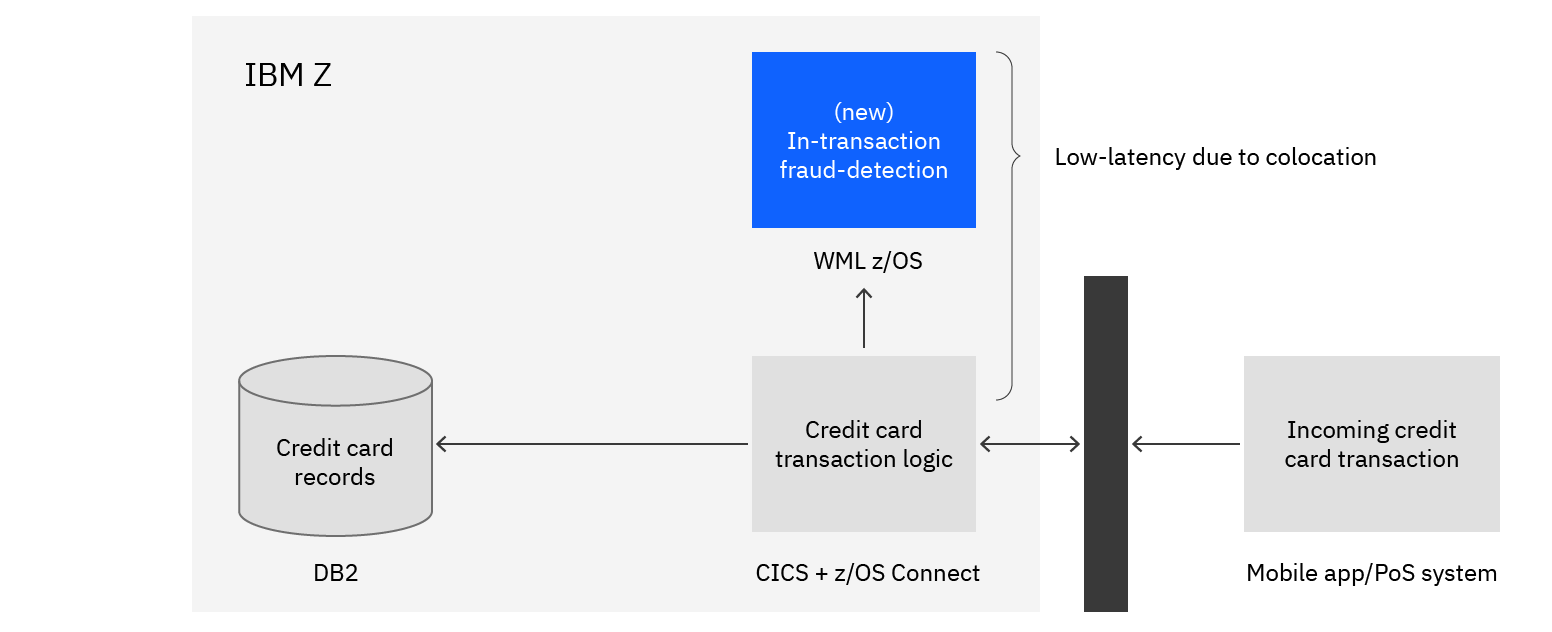

Example 1: A credit card provider uses CICS (COBOL/Java) and DB2 as the system of record. The provider decided to add fraud detection capabilities and wanted them in-transaction, to catch fraud before it occurred. Deploying this new application logic on any cloud or on distributed systems would incur unpredictable latency that is typically unacceptable for in-flight operations. The existing application logic invokes the new function using an API (e.g., implementing the Extend pattern). Off-platform, this call can take tens if not hundreds of milliseconds: the API call must go through an API gateway, cross firewalls and deal with unpredictable cloud-based application response times, and the new application logic may incur additional delays when it accesses Systems-of-Record data residing on IBM Z through further API calls (see details of the architecture in “Deploying a new In-Transaction function to an existing IBM Z application”). Deploying the new in-transaction fraud detection function on IBM zCX minimized the latency in accessing this new service. In addition to lower latency due to optimal network routes, IBM z16 comes equipped with an on-chip AI inference accelerator, which can be leveraged by WML for z/OS, further speeding up fraud detection. The table at the end of this pattern describes various other modes of deployment to meet various operational requirements.

B. Migrate existing cloud applications for efficiency: As part of prior modernization efforts, organizations may have migrated subsets of applications to distributed systems (on-premises or public cloud). Typically, the non-functional requirements (NFRs) that guided this migration strategy are not met in practice. While the cause is sometimes cost, the primary cause is the inability to achieve or maintain SLAs, typically due to latency spikes. Collocating these applications closer to the system of record data sources and services on IBM Z can mitigate this risk.

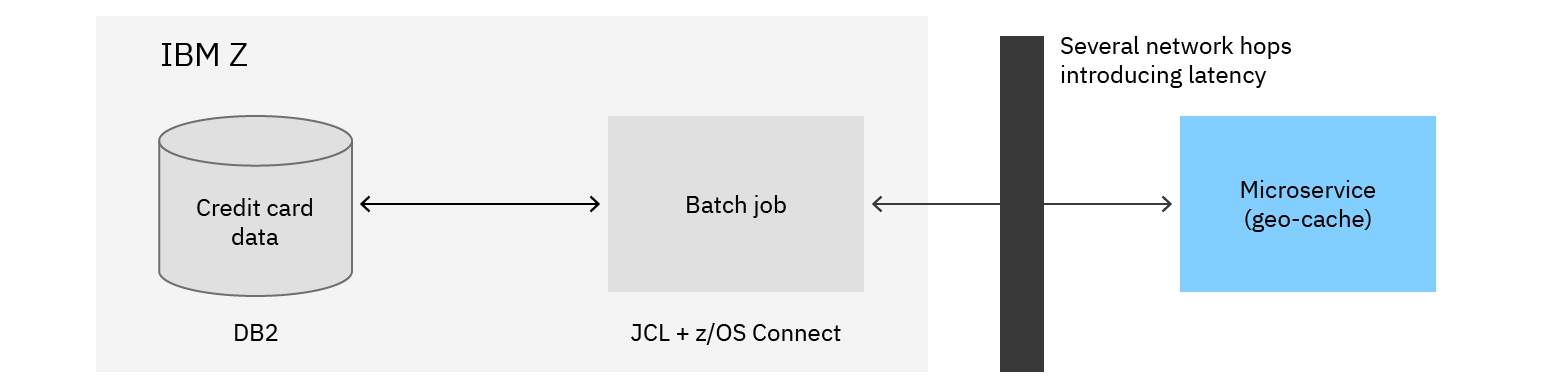

Example 2: An organization ran batch jobs each night and, as part of a past modernization effort, migrated a piece of the business logic to Java microservices running on OpenShift on distributed hardware. Network hops introduced significant latency, which caused the organization problems in meeting its batch processing windows.

As IBM Z supports Java (with industry-leading Java performance), the team collocated their application on the platform without needing to change the code. Latency was significantly reduced (7-15x reduction) and batch window times were reduced by an order of magnitude.

There is another reason for migrating public cloud applications to IBM Z: it provides an opportunity to offer value-added services to end users that might otherwise have been SLA-prohibitive. Collocating the existing app with the system of record increases SLA buffers, which allows additional value-added services to be included in the transaction while still adhering to strict SLAs. Another benefit is that it eliminates the need for ETL and data replication, which lead to out-of-date data and generally increase MIPS consumption (by upwards of 25%).

Example 3: In this example, the organization has an internal end-to-end transaction latency goal of, say, 250 ms on average (with occasional spikes to 750 ms or more) and an external SLA requirement of, say, 300 ms. While the average latency is below the external SLA, the organization still sees an opportunity to improve the average and peak latencies. By collocating the application with the data sources, the average transaction latency is reduced to, say, 150 ms, leaving a buffer of 100 ms against the internal goal to provide additional value-added services. This could also lead to a throughput increase (clients in the field routinely see 10x higher throughput).

In this scenario, the organization can additionally provide a service like fraud detection and still be left with a buffer of 20-50 ms while respecting its SLA average and peak windows.
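
The latency-budget arithmetic in Example 3 can be sketched as follows. This is a minimal illustration, assuming the 100 ms buffer is measured against the 250 ms internal goal. The function name `remaining_buffer_ms` and the 50 to 80 ms cost of the fraud detection call are hypothetical, chosen only so that the result matches the 20-50 ms buffer quoted above.

```python
def remaining_buffer_ms(goal_ms: int, base_latency_ms: int, added_service_ms: int = 0) -> int:
    """Return the latency headroom left under a goal after adding a service."""
    return goal_ms - (base_latency_ms + added_service_ms)


INTERNAL_GOAL_MS = 250   # internal average latency goal (Example 3)
EXTERNAL_SLA_MS = 300    # external SLA requirement (Example 3)
COLLOCATED_AVG_MS = 150  # average latency after collocation (Example 3)

# Headroom after collocation, before adding any new service.
buffer_ms = remaining_buffer_ms(INTERNAL_GOAL_MS, COLLOCATED_AVG_MS)

# Assumed (hypothetical) cost range of an in-transaction fraud detection call.
FRAUD_COST_RANGE_MS = (50, 80)
buffers_after_fraud = [
    remaining_buffer_ms(INTERNAL_GOAL_MS, COLLOCATED_AVG_MS, cost)
    for cost in FRAUD_COST_RANGE_MS
]

print(buffer_ms)            # 100
print(buffers_after_fraud)  # [50, 20]
```

The same function can be applied to peak latencies or to the external SLA by changing `goal_ms` and `base_latency_ms`.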

C. Incremental modernization with minimal risk: As part of the move to microservices-based containers, organizations typically face two steep learning curves: one for moving from monolith to microservice operations and another for operating containers in production. With vertical scale and collocation of workloads on IBM Z, the risk of modernization can be significantly reduced.

Example 4: An organization wanted to follow an incremental approach to modernizing its monolithic WebSphere Application Server (WAS) application, which ran on a distributed platform and accessed a DB2 database on IBM Z. The organization saw an opportunity to collocate the WAS business logic with its system of record on DB2 to improve throughput. It re-hosted the traditional WAS application on WAS Liberty without needing to change any code. Due to the vertical scale of the IBM Z platform, along with its industry-leading Java performance and large processor caches, the net result was a 40x+ increase in throughput (queries/minute).

Moreover, due to the scale-up capabilities of IBM Z, they could containerize their monolith without first converting it to microservices, and then convert to microservices later using tools like Transformation Advisor (part of WebSphere Hybrid Edition) and following the Refactor as services pattern. This enabled incremental, low-risk modernization: the client gained operational skills with containers first, followed by the skills to manage microservices.

D. Consolidate/Migrate existing cloud applications for efficiency: As part of prior modernization efforts, organizations may have developed greenfield applications deployed to distributed systems (on-premises or cloud) or migrated subsets of applications to these platforms. In contrast to distributed systems, where smaller components of distributed applications are spread over multiple lower-capacity hardware platforms, IBM Z and LinuxONE provide significantly higher compute and I/O density in a small physical footprint. This lends itself to better vertical and horizontal scalability as well as up to 75% lower power consumption and reduced cooling costs. Also, as mentioned earlier, HiperSockets and SMC-D technologies reduce network latency across fewer application components. Thus, consolidation and collocation of application components through vertical and horizontal scaling improve not just performance but also TCO. Heritage and cloud-native products typically have core-based pricing, where more cores lead to higher software licensing costs and therefore a higher TCO. By consolidating workloads (e.g., a relational database like Oracle or DB2), organizations can benefit from reduced TCO.
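
The effect of core-based pricing on licensing cost can be illustrated with simple arithmetic. The sketch below assumes a flat per-core license price that is the same before and after consolidation, which real price lists may not reflect. The core counts and the price are hypothetical placeholders, not figures for any product or platform.

```python
def annual_license_cost(licensed_cores: int, price_per_core: int) -> int:
    """Core-based pricing: cost grows linearly with the number of licensed cores."""
    return licensed_cores * price_per_core


PRICE_PER_CORE = 1_000   # hypothetical price per core per year
DISTRIBUTED_CORES = 40   # hypothetical: cores licensed across many small servers
CONSOLIDATED_CORES = 16  # hypothetical: cores licensed after consolidation

cost_before = annual_license_cost(DISTRIBUTED_CORES, PRICE_PER_CORE)
cost_after = annual_license_cost(CONSOLIDATED_CORES, PRICE_PER_CORE)
savings_pct = 100 * (cost_before - cost_after) / cost_before

print(cost_before, cost_after, savings_pct)  # 40000 16000 60.0
```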

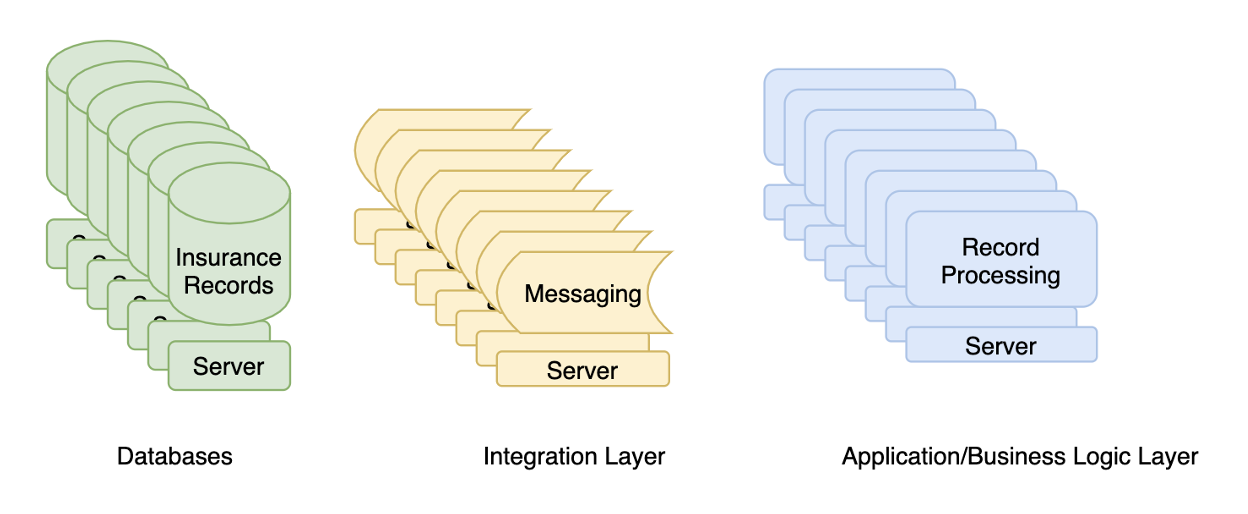

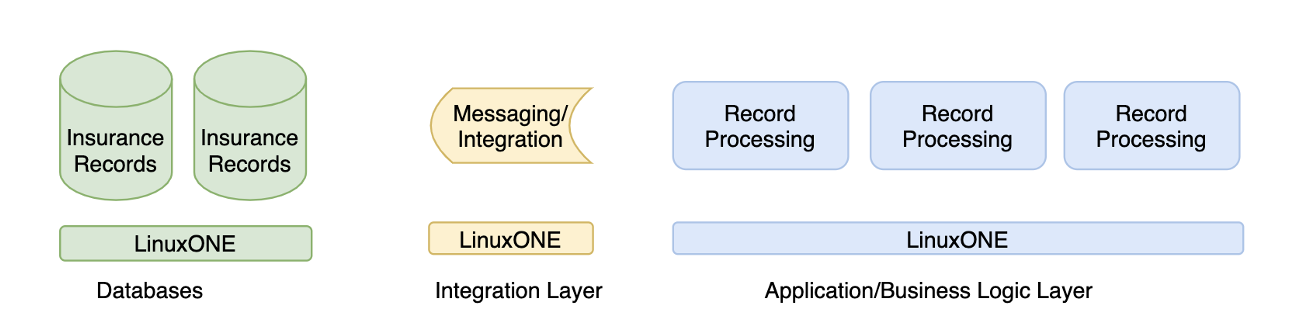

Specifically, if we re-use our existing record processing use case (Example 4), we can consolidate the data serving, the message serving and the application business logic to reduce the overall cost of ownership. In addition to cost reduction, consolidation also provides higher performance in scenarios where horizontal scale (or partitioning or sharding in databases) adds unnecessary network hops.

(Figure: Existing insurance record processing application deployed on distributed systems)

(Figure: Consolidated record processing that benefits from reduced cost of ownership, reduced operational complexity and higher scalability)

(Figure: Collocated and consolidated record processing that benefits from higher performance, reduced cost of ownership and reduced operational complexity and higher scalability)

Advantages

A key business benefit of using this pattern is an order-of-magnitude reduction in latency when accessing core applications and data on IBM Z. Using ultra-low-latency technology, it reduces latency not only between microservices but also between microservices and existing applications and data on IBM Z. This not only improves performance of the overall solution but also has many other direct or implied benefits. Overall, enterprises do not have to abandon their decades of investment in existing core applications on IBM Z; instead, they can breathe new life into these applications by extending their capabilities with cloud-native applications. These assets cannot be recreated easily without a huge upfront cost, significant effort and a long delay. The benefits also extend to leveraging investment in existing distributed applications by collocating them on the IBM Z platform.

Cloud-native application advantages: The use of cloud-native technologies can enable organizations to build highly scalable applications in today’s modern environment with private, public and hybrid clouds. Specifically, the ability to use cloud-native applications with existing core applications on z/OS can:

- Enable faster development and deployment of new capabilities, responding to market demands using modern development tools and processes

- Address skills shortages in older technologies by leveraging open standards-based languages

- Take advantage of security frameworks available with modern applications to meet compliance standards

- Avoid the high risks of completely rebuilding business-critical applications

- Provide the ability to deploy updates without redeploying the entire application

- Support agility in developing and deploying new cloud-native microservices-based applications for an overall lower-cost hybrid-cloud solution

- Place new applications in close proximity to existing system of record data to reduce latency, e.g., by using APIs for easy access to business-critical applications and associated data running on IBM Z

IBM Z platform advantages: In addition to reduced latency, leveraging this pattern and collocating supporting applications closer to core applications on IBM Z will result in the following benefits.

- Reduced Total Cost of Ownership (TCO), together with a private cloud in a box

- Business continuity through scale-up and scale-out of workloads

- Security for business-critical applications and data, with high availability

- A consistent end-user experience during dynamic workload surges, with scale-up and scale-out to fit workload needs

- Support for data sensitivity requirements, such as internal policies, government regulations, or industry compliance requirements, with a private cloud environment

- A secure end-to-end pipeline from developer to production and zero-trust deployments

- The ability to meet the demands of applications that use services in multiple on-premises secure containers and on multiple clouds

- Ease of deploying packaged solutions

- Access to turn-key solutions that include deployment, orchestration and operational tooling

Considerations

Given the innovation on the IBM Z platform, there are several application deployment options for meeting and maximizing the non-functional requirements of applications. Some of these options are:

- Natively on a Linux distribution (e.g., Red Hat Enterprise Linux (RHEL), SUSE Linux Enterprise Server (SLES) or Ubuntu with enterprise support, as well as Debian, Fedora etc.)

- Within a container runtime (e.g., Docker, Podman etc.) on Linux

- Within an IBM z/OS Container Extensions (zCX) environment, which enables deployment of Linux-based containers in an IBM Z environment

- Natively in z/OS (e.g., in a CICS region, IMS, USS etc.)

For consolidation of distributed applications, the same criteria can be used to determine the placement of components relative to each other. For example, if a specific latency percentile is part of the SLA, the business logic layer is best collocated with the consolidated database it uses.
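
One way to apply the latency-percentile criterion above is to compare a measured percentile for each candidate placement against the SLA target. The sketch below is illustrative only: the nearest-rank percentile method, the function names, the sample latencies and the 20 ms p99 target are all assumptions, not measurements from any system.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def meets_sla(samples, pct, target_ms):
    """True if the pct-th percentile latency is within the SLA target."""
    return percentile(samples, pct) <= target_ms


# Hypothetical latency samples in milliseconds for two placements.
collocated_ms = [2, 3, 2, 4, 3, 2, 5, 3, 2, 6]
remote_ms = [12, 15, 11, 40, 14, 13, 95, 12, 16, 13]

P99_TARGET_MS = 20  # hypothetical SLA: 99th percentile within 20 ms

print(meets_sla(collocated_ms, 99, P99_TARGET_MS))  # True
print(meets_sla(remote_ms, 99, P99_TARGET_MS))      # False
```

A percentile is used rather than an average because a few slow requests, such as the 95 ms sample above, can break a percentile-based SLA even when most requests are fast.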

There are several considerations in striking the right balance between latency reduction via collocation and other factors, such as:

- Cost

- Deployment Density

- Ecosystem of software

- Operational Alignment

- Scale

- …and other reasons

This table describes the options above for each of those factors and beyond:

| Location | Description | Pros | Cons |
|---|---|---|---|
| Natively on Linux | Running workloads (monolithic or microservice-based) natively on Linux distributions such as Red Hat Enterprise Linux, SUSE Linux Enterprise Server etc. | | |
| Red Hat OpenShift on IBM z/VM® or KVM | Red Hat announced OpenShift Container Platform availability on the IBM Z and LinuxONE platforms, with support for both IBM z/VM and Red Hat KVM as hypervisors. | | |
| Containers on zCX | IBM® z/OS® Container Extensions (IBM zCX) makes it possible to integrate Linux on Z applications with z/OS. Application developers can develop, and data centers can operate, popular open-source packages, Linux applications, IBM software, and third-party software together with z/OS applications and data. | | |
| Natively on z/OS, z/TPF etc. | Running microservices natively on z/OS means running Java, COBOL, Node.js, Go etc. applications in CICS, USS, z/TPF etc. | | |

Note that all of these options can co-exist with a DevOps pipeline to provide a best-fit deployment model for applications. In certain cases, it might be beneficial to deploy the same application across multiple targets, as it might have consumers in those targets that can benefit from collocation. For more information, see the Enterprise DevOps pattern.

What's next

- Review these additional patterns that can directly benefit from this Collocate applications pattern:

- Learn more about:

  - Deploy containers inside z/OS and closer to the z/OS application using the z/OS Container Extensions.

  - Deploy containers on Red Hat OpenShift Container Platform on premises on IBM Z or a distributed platform.

  - Tuning network performance for Red Hat OpenShift on IBM Z for further fine-tuning latency

Contributors

Elton DeSouza

IBM Systems, IBM

Asit Dan

z Services API Management, Chief Architect, IBM Master Inventor, IBM Systems, IBM