White Papers

Abstract

Tivoli Storage Manager for Virtual Environments - Data Protection for VMware provides the ability to back up and restore virtual machine data using SAN-based data movement. SAN-based data movement is possible on two different data paths: the first runs from the VMware datastore to the vStorage backup server, and the second runs from the vStorage backup server to the TSM server. For the remainder of this document, these two data paths are referred to as the transport data path and the backup data path, respectively. This document covers setup considerations for enabling SAN data transfer on both data paths. The backup data path uses the Tivoli Storage Manager for Storage Area Networks feature, which is referred to as LAN-free backup for the remainder of this document.

Content

- Preparation steps for the vStorage backup server.

- TSM server storage pool configuration.

- TSM server policy configuration.

- Setting up the TSM Storage Agent.

- Setting up LAN-free options for each backup instance.

- Testing the configuration.

Preparation steps for the vStorage backup server

The diskpart step below is necessary to prevent the backup server from damaging SAN volumes that are used for raw device mapping (RDM) virtual disks.

- Use the Windows diskpart.exe program to disable automatic mounting of volumes:

    > diskpart
    DISKPART> automount disable
    DISKPART> exit

- For Windows 2008 systems, use the Windows diskpart.exe program to set the SAN policy to OnlineAll, which automatically brings newly discovered SAN volumes online:

    > diskpart
    DISKPART> san policy=OnlineAll
    DISKPART> exit

- Modify the SAN zone configuration so that the vStorage backup server has visibility to the LUNs hosting the VMware datastores and to all of the tape drives used by the TSM server storage pool that will be the LAN-free backup target. If your vStorage backup server has multiple HBAs, you can separate the VMware disk traffic from the tape traffic by placing the HBA ports on the backup server in different zones.

- Install the multi-path driver support package provided by the vendor of the disk subsystem which is used to back your VMware datastores.

- Install the tape device driver required for the specific tape technology which is used in your environment.

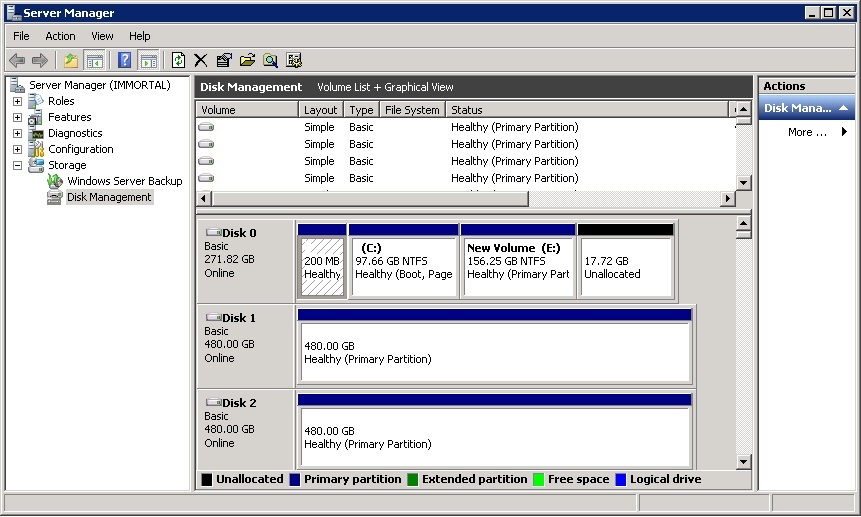

- Determine the tape drive device addresses to be used later using the define path command on the TSM server. (See screen shot below.)

- On the storage subsystem which hosts the LUNs backing the VMware datastores, update the host assignments so that the vStorage backup server has access to the LUNs. For most subsystems, this involves defining a cluster or host group that allows multiple hosts to be assigned to the same group of disks. Many subsystems will give a warning that hosts of different types have been granted access to the same disks.

- Install the TSM Backup-Archive client including the VMware Backup Tools feature.

- Install the TDP for VMware mount interface to enable full VM incremental backups.
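The two diskpart changes can also be applied non-interactively. The following Python sketch writes them to a script file that can be passed to diskpart /s. The file name prep_san.txt and the helper names are hypothetical, and the resulting script still has to be run from an administrator command prompt on the vStorage backup server.

```python
"""Write the vStorage backup server diskpart preparation steps to a script file.

Hypothetical helper: run the resulting file with "diskpart /s prep_san.txt"
from an administrator command prompt on the backup server.
"""
from pathlib import Path


def diskpart_script(windows_2008: bool) -> str:
    """Return the diskpart commands from the preparation steps, one per line."""
    commands = ["automount disable"]  # keep Windows from automatically mounting new volumes
    if windows_2008:
        # Windows 2008 only: bring newly discovered SAN volumes online
        commands.append("san policy=OnlineAll")
    commands.append("exit")
    return "\n".join(commands) + "\n"


def write_script(path: Path, windows_2008: bool) -> Path:
    """Save the commands so they can be passed to 'diskpart /s'."""
    path.write_text(diskpart_script(windows_2008), encoding="ascii")
    return path


if __name__ == "__main__":
    script = write_script(Path("prep_san.txt"), windows_2008=True)
    print(f"Run from an administrator prompt: diskpart /s {script}")
```

On Windows 2003 systems, call the helper with windows_2008=False so that only the automount change is written.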


TSM server storage pool and policy configuration

Two storage pools are required on the TSM server. The first storage pool, named VTLPOOL, is the primary container for virtual machine data files. The second pool, named VMCTLPOOL, stores the control files that are used during full VM incremental backup and virtual machine restore. The amount of space used in each of these storage pools varies with the size of the virtual disks.

- Set the TSM server name and password:

    set servername scorpio2
    set serverpassword pass4server

- Create a library definition on the TSM server:

    define library VTLLIB LIBTYPE=scsi SHARED=yes AUTOLABEL=overwrite RELABELSCRATCH=yes

- Define a path from the server to the library:

    define path scorpio2 VTLLIB SRCT=server DESTT=library DEVICE=/dev/smc0 online=yes

- Define each of the 10 tape drives in the virtual tape library:

    define drive VTLLIB drivea ELEMENT=autodetect SERIAL=autodetect
    define drive VTLLIB driveb ELEMENT=autodetect SERIAL=autodetect
    < ... >
    define drive VTLLIB drivej ELEMENT=autodetect SERIAL=autodetect

- Define paths from the server to each of the 10 tape drives:

    define path scorpio2 drivea SRCT=server DESTT=drive LIBR=vtllib DEVICE=/dev/rmt0
    define path scorpio2 driveb SRCT=server DESTT=drive LIBR=vtllib DEVICE=/dev/rmt1
    < ... >
    define path scorpio2 drivej SRCT=server DESTT=drive LIBR=vtllib DEVICE=/dev/rmt9

- Define the device class and storage pool:

    define devclass vtl_class DEVTYPE=lto LIBRARY=vtllib
    define stgpool vtlpool vtl_class MAXSCRATCH=100

- Create the file device class:

    define devc vmctlfile DEVT=file MOUNTLIMIT=150 MAXCAP=1024m DIR=/tsmfile

- Create the storage pool to contain control files:

    def stg VMCTLPOOL vmctlfile MAXSCRATCH=200
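Typing the ten define drive and define path commands by hand is error-prone. The Python sketch below generates them. It assumes that the naming pattern visible in the examples continues: drivea through drivej, with /dev/rmt0 through /dev/rmt9 on the server. The helper names are hypothetical. You could save the printed commands to a file and run them from the administrative client with the macro command.

```python
"""Generate the repetitive TSM drive and path definitions (illustrative sketch)."""
from string import ascii_lowercase


def drive_names(count: int, prefix: str = "drive") -> list[str]:
    """Return drivea, driveb, ... (this naming scheme supports at most 26 drives)."""
    if not 1 <= count <= 26:
        raise ValueError("count must be between 1 and 26")
    return [prefix + letter for letter in ascii_lowercase[:count]]


def drive_commands(library: str, count: int) -> list[str]:
    """One 'define drive' command per drive; element and serial are autodetected."""
    return [
        f"define drive {library} {name} ELEMENT=autodetect SERIAL=autodetect"
        for name in drive_names(count)
    ]


def path_commands(source: str, library: str, device_template: str, count: int) -> list[str]:
    """One 'define path' command per drive; device_template receives the drive index."""
    return [
        f"define path {source} {name} SRCT=server DESTT=drive "
        f"LIBR={library} DEVICE={device_template.format(index)}"
        for index, name in enumerate(drive_names(count))
    ]


if __name__ == "__main__":
    commands = drive_commands("VTLLIB", 10)
    commands += path_commands("scorpio2", "vtllib", "/dev/rmt{}", 10)
    print("\n".join(commands))
```

The same path helper can produce the storage agent paths, for example with source zergling_sta and the device template r"\\.\tape{}". Check each generated device address against the addresses collected on the backup server, because the drive-to-device order is not guaranteed to follow the drive letters.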

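Define the policy domain, the policy set, and the two management classes (LANFREE, the default, for data and CONTROL for control files). Bind each management class to its storage pool through a backup copy group, activate the policy set, and register the node:

    define domain vmfullbackup
    define pol vmfullbackup policy1
    define mgmt vmfullbackup policy1 lanfree
    assign defmgmt vmfullbackup policy1 lanfree
    define mgmt vmfullbackup policy1 control
    define copy vmfullbackup policy1 lanfree TYPE=backup DEST=vtlpool VERE=3 VERD=1 RETE=30 RETO=10
    define copy vmfullbackup policy1 control TYPE=backup DEST=vmctlpool VERE=3 VERD=1 RETE=30 RETO=10
    activate pol vmfullbackup policy1
    register node zergling password02 DOMAIN=vmfullbackup MAXNUMMP=8

Setting up the TSM Storage Agent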

After the initial setup of the vStorage backup server and the TSM server has been completed, the next step is to set up the TSM Storage Agent on the vStorage backup server. The storage agent is the component that allows LAN-free data movement between the TSM backup-archive client and the TSM server. The following steps outline the setup procedure.

- Install the TSM storage agent, which is available as one of the subcomponents in the installation package for the TSM server.

- Set up the required definitions on the TSM server for the storage agent. All of the following commands are entered through the dsmadmc interface:

- Create a server definition on the TSM server for the storage agent:

    define server zergling_sta hla=zergling.acme.com lla=1500 serverpa=password01

- Define paths on the TSM server from the storage agent to all of the tape drives. This step requires the device addresses that were collected in the previous section.

    define path zergling_sta DRIVEA SRCT=server DESTT=drive LIBR=vtllib device=\\.\tape0
    define path zergling_sta DRIVEB SRCT=server DESTT=drive LIBR=vtllib device=\\.\tape1
    < ... >
    define path zergling_sta DRIVEJ SRCT=server DESTT=drive LIBR=vtllib device=\\.\tape9

- Customize the storage agent's options file, dsmsta.opt, which is installed by default in C:\Program Files\tivoli\tsm\storageagent:

    DEVCONFIG devconfig.out
    COMMMETHOD tcpip
    TCPPORT 1500
    COMMMETHOD sharedmem
    SHMPORT 1512

- Run the setstorageserver command on the storage agent to complete the storage agent configuration.

  Note: The storage agent password was set when the define server command was issued, and the TSM server password was set during the initial server configuration with the set serverpassword command.

    > c:
    > cd \program files\tivoli\tsm\storageagent
    > dsmsta setstorageserver myname=zergling_sta mypass=password01 myhla=zergling.acme.com servername=scorpio2 serverpass=pass4server hladdress=scorpio2.acme.com lladdress=1500

- Start the storage agent in the foreground to verify that it starts correctly. In the startup messages, confirm that both communication protocols initialize successfully and that the shared library initializes successfully, with a message similar to the one shown below.

- Create a service that runs the storage agent as a background task. After creating the service, update the service properties in the Services management console so that the service starts automatically at boot. It might also be necessary to grant the specified user ID the right to log on as a service in Windows.

    > c:
    > cd \program files\tivoli\tsm\storageagent
    > install.exe "TSM StorageAgent1" "e:\program files\tivoli\tsm\storageagent\dstasvc.exe" zergling\administrator secretPW
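Several values in this section must agree. The lla value in the define server command is the TCP port that the TSM server uses to reach the storage agent, so it should equal TCPPORT in dsmsta.opt. SHMPORT is the shared memory port that the backup-archive client pairs with. The following Python sketch checks the dsmsta.opt example against these expectations. The parser is deliberately simplified for this example: it assumes full option keywords (no abbreviations) and treats lines that start with * as comments. The function names are hypothetical.

```python
"""Cross-check storage agent options against the define server command (sketch)."""

# dsmsta.opt contents from the example in this document
DSMSTA_OPT = """\
DEVCONFIG devconfig.out
COMMMETHOD tcpip
TCPPORT 1500
COMMMETHOD sharedmem
SHMPORT 1512
"""


def parse_options(text: str) -> dict[str, list[str]]:
    """Map each upper-cased keyword to its values; '*' lines are comments."""
    options: dict[str, list[str]] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("*"):
            continue
        keyword, _, value = line.partition(" ")
        options.setdefault(keyword.upper(), []).append(value.strip())
    return options


def check_storage_agent(options: dict[str, list[str]], server_lla: int) -> list[str]:
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    methods = {value.lower() for value in options.get("COMMMETHOD", [])}
    for method in ("tcpip", "sharedmem"):
        if method not in methods:
            problems.append(f"COMMMETHOD {method} is missing")
    if options.get("TCPPORT") != [str(server_lla)]:
        problems.append(f"TCPPORT should be {server_lla} to match lla in define server")
    if len(options.get("SHMPORT", [])) != 1:
        problems.append("exactly one SHMPORT is expected (clients pair with it)")
    return problems


if __name__ == "__main__":
    issues = check_storage_agent(parse_options(DSMSTA_OPT), server_lla=1500)
    print("OK" if not issues else "\n".join(issues))
```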

Setting up LAN-free options for each backup instance

The example backup-archive client options file shown below includes the required options for one backup instance on the vStorage backup server. The enablelanfree, lanfreecommmethod, and lanfreeshmport options enable LAN-free data movement and identify the communication parameters required to pair with the storage agent. The vmmc and vmctlmc options force the separation of data and control files into the two management classes previously defined on the TSM server.

Note: The vmvstortransport option does not need to be set, because SAN is the preferred transport by default. It is included as an example of how to disable fallback to LAN transports. The example options are commented out. If VMVSTORTRANSPORT san is enabled, backups fail when the SAN path is not available instead of switching to a LAN-based transport.
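A toy Python model can illustrate the effect of the VMVSTORTRANSPORT settings in the option file. The client tries the transports in the listed order and uses the first one that is available. This is not the client's implementation. The default order used below (san:hotadd:nbdssl:nbd) is an assumption that you should verify against the documentation for your client level. Only the san:nbd and san settings come from this document.

```python
"""Toy model of an ordered VMVSTORTRANSPORT list (not the client's actual code)."""

# Assumption: default order for the client level in use; verify in the client docs.
ASSUMED_DEFAULT = "san:hotadd:nbdssl:nbd"


def choose_transport(setting: str, available: set[str]) -> str:
    """Return the first listed transport that is available; fail if none is."""
    for transport in setting.lower().split(":"):
        if transport in available:
            return transport
    raise RuntimeError(f"no transport in '{setting}' is available, so the backup fails")


if __name__ == "__main__":
    lan_only = {"nbdssl", "nbd"}  # SAN path down, LAN transports still reachable
    print(choose_transport(ASSUMED_DEFAULT, lan_only))  # falls back to nbdssl
    print(choose_transport("san:nbd", lan_only))        # skips NBDSSL, uses nbd
    try:
        choose_transport("san", lan_only)
    except RuntimeError as err:
        print(err)                                      # SAN only: backup fails
```

The model shows why san:nbd still allows a LAN fallback, while san alone turns a missing SAN path into a backup failure.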

    * TSM server communication options
    TCPSERVERADDRESS scorpio2.acme.com
    TCPP 1500
    NODENAME zergling
    PASSWORDACCESS GENERATE

    * VMware related options
    VMCHOST vcenter.acme.com
    VMCUSER administrator
    VMCPW ****
    VMBACKUPTYPE full
    VMFULLTYPE vstor

    * LAN-free options
    enablelanfree yes
    lanfreecommm sharedmem
    lanfreeshmport 1512

    * Management class control options
    VMMC lanfree
    VMCTLMC control

    * Transport control (optionally uncomment one of the following)
    * CAUTION: use of non-default settings for the VMVSTORTRANSPORT
    * option can result in undesirable backup failures.
    * VMVSTORTRANSPORT san:nbd   * prevent the use of NBDSSL
    * VMVSTORTRANSPORT san       * prevent the use of all LAN transports

Validate the LAN-free configuration

    tsm: SCORPIO2>validate lanfree zergling zergling_sta
    ANR0387I Evaluating node ZERGLING using storage agent ZERGLING_STA for LAN-free data movement.

    Node       Storage       Operation  Mgmt Class  Destination  LAN-Free  Explanation
    Name       Agent                    Name        Name         capable?
    --------   ------------  ---------  ----------  -----------  --------  -----------
    ZERGLING   ZERGLING_STA  BACKUP     CONTROL     VMCTLPOOL    No
    ZERGLING   ZERGLING_STA  BACKUP     LANFREE     VTLPOOL      Yes

    ANR1706I Ping for server 'ZERGLING_STA' was able to establish a connection.
    ANR0388I Node ZERGLING using storage agent ZERGLING_STA has 1 storage pools capable of LAN-free data movement and 1 storage pools not capable of LAN-free data movement.

Run a test backup

After the validate lanfree command completes successfully, you are ready to run some test backups. In the example output below, two points of interest are outlined in red. The first indicates that the SAN transport was used for the transport data path between the VMware datastore and the vStorage backup server. The second indicates that LAN-free data movement was used for the backup data path between the vStorage backup server and the TSM server.
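The validate lanfree check that precedes the test backups can also be scripted. The Python sketch below parses output in the format shown in the previous section. For each management class, it reports the destination pool and whether that pool is LAN-free capable. It assumes the table rows are not wrapped (node and storage agent names printed in full) and that the columns appear in the order shown. The function names are hypothetical.

```python
"""Parse 'validate lanfree' output (sketch; assumes unwrapped rows)."""

SAMPLE = """\
ANR0387I Evaluating node ZERGLING using storage agent ZERGLING_STA for LAN-free data movement.
Node       Storage       Operation  Mgmt Class  Destination  LAN-Free  Explanation
Name       Agent                    Name        Name         capable?
--------   ------------  ---------  ----------  -----------  --------  -----------
ZERGLING   ZERGLING_STA  BACKUP     CONTROL     VMCTLPOOL    No
ZERGLING   ZERGLING_STA  BACKUP     LANFREE     VTLPOOL      Yes

ANR1706I Ping for server 'ZERGLING_STA' was able to establish a connection.
"""


def lanfree_capability(output: str) -> dict[str, tuple[str, bool]]:
    """Map management class -> (destination pool, LAN-free capable?)."""
    result: dict[str, tuple[str, bool]] = {}
    in_table = False
    for line in output.splitlines():
        fields = line.split()
        if line.lstrip().startswith("---"):
            in_table = True   # data rows follow the dashed separator
        elif not fields:
            in_table = False  # a blank line ends the table
        elif in_table and len(fields) >= 6 and not fields[0].startswith("ANR"):
            mgmt_class, destination, capable = fields[3:6]
            result[mgmt_class.upper()] = (destination.upper(), capable.lower() == "yes")
    return result


def agent_reachable(output: str) -> bool:
    """ANR1706I reports that the server could ping the storage agent."""
    return "ANR1706I" in output


if __name__ == "__main__":
    print(lanfree_capability(SAMPLE))
    print(agent_reachable(SAMPLE))
```

In this configuration, only the LANFREE class is expected to be LAN-free capable. The CONTROL class writes to the file pool VMCTLPOOL, which validate lanfree reports as not LAN-free capable.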

Use the query occupancy command on the TSM server to confirm that backup files have been stored in both of the configured storage pools. If query occupancy does not show files in both pools, check the storage pool definitions, the management class definitions, and the client options. It is normal to see two more files in the control file storage pool than in the data storage pool (TAPEPOOL in the example output) for every backup version.

    tsm: SCORPIO2>q occ zergling

    Node Name  Type  Filespace   FSID  Storage     Number of    Physical
                     Name              Pool Name       Files  Space (MB)
    ---------- ----  ----------  ----  ----------  ---------  ----------
    ZERGLING   Bkup  \VMFULL-w-     1  TAPEPOOL          110   12,782.30
                      in2003x64
                      - host3
    ZERGLING   Bkup  \VMFULL-w-     1  VMCTLPOOL         112        7.77
                      in2003x64
                      - host3
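The occupancy check described above can be sketched in Python. The sketch sums the Number of Files column per storage pool. It reads only the last three columns of each row, so wrapped filespace names do not matter. It then compares the control pool count with the data pool count plus two files per backup version. The caller supplies the number of backup versions. The data pool name is a parameter because the example output shows TAPEPOOL rather than the VTLPOOL name used in the configuration steps. The function names are hypothetical.

```python
"""Check 'query occupancy' output for a LAN-free VM backup node (sketch)."""

SAMPLE = r"""
Node Name  Type  Filespace   FSID  Storage     Number of    Physical
                 Name              Pool Name       Files  Space (MB)
---------- ----  ----------  ----  ----------  ---------  ----------
ZERGLING   Bkup  \VMFULL-w-     1  TAPEPOOL          110   12,782.30
                  in2003x64
                  - host3
ZERGLING   Bkup  \VMFULL-w-     1  VMCTLPOOL         112        7.77
                  in2003x64
                  - host3
"""


def files_per_pool(output: str) -> dict[str, int]:
    """Sum the 'Number of Files' column per storage pool."""
    counts: dict[str, int] = {}
    for line in output.splitlines():
        fields = line.split()
        # Data rows end with: pool name, file count, physical space.
        if len(fields) < 6 or not fields[-2].replace(",", "").isdigit():
            continue  # header, separator, or wrapped filespace-name line
        pool = fields[-3].upper()
        counts[pool] = counts.get(pool, 0) + int(fields[-2].replace(",", ""))
    return counts


def check_occupancy(counts: dict[str, int], data_pool: str,
                    control_pool: str, versions: int) -> list[str]:
    """Return problems; expects control files = data files + 2 per version."""
    problems = [f"no files in {pool}" for pool in (data_pool, control_pool)
                if counts.get(pool, 0) == 0]
    expected = counts.get(data_pool, 0) + 2 * versions
    if counts.get(control_pool, 0) != expected:
        problems.append(f"{control_pool} has {counts.get(control_pool, 0)} files, "
                        f"expected {expected}")
    return problems


if __name__ == "__main__":
    counts = files_per_pool(SAMPLE)
    print(counts)  # {'TAPEPOOL': 110, 'VMCTLPOOL': 112}
    print(check_occupancy(counts, "TAPEPOOL", "VMCTLPOOL", versions=1) or "OK")
```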

Document Information

Modified date:

19 March 2020

UID

ibm13433611