General Page

IBM Db2 Warehouse in IBM Cloud Pak for Data 3.5 delivers superior performance for warehouse workloads on IBM Power Systems

Author: Theresa Xu

Introduction

This performance report summarizes the test results from running the IBM® Db2® Warehouse BI-Day workload under IBM Cloud Pak® for Data 3.5 in an IBM POWER9™ big core IBM PowerVM® (hypervisor) based Red Hat® OpenShift® Container Platform 4.5 cluster.

Note: BI-Day is the name of the workload used for the test.

The tests were done using IBM General Parallel File System (IBM GPFS) for Network File System (NFS) as the underlying storage for the IBM Cloud Pak for Data cluster located in the Austin IBM lab.

Test environment

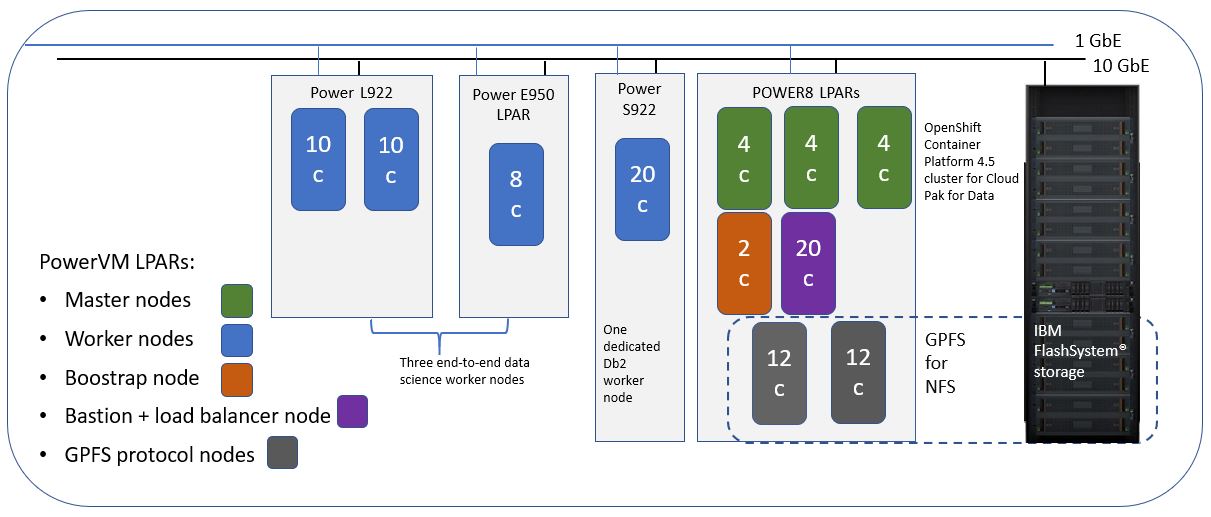

IBM POWER® cluster:

8-node PowerVM cluster – Single Cloud Pak for Data instance: cp4d-cpd-cp4d.apps.cp4d-p.toropsp.com

| 1 bastion node with load balancer | 160 vCPU (SMT 8), 512 GB RAM |

| perfbriggs3 | IBM Power® System S822LC (8001-22C) @ 3.491 GHz |

| Three controllers (masters) | per node:32 vCPUs (8 physical cores with SMT4), 240 GB RAM Total: 96 vCPU, 720 GB RAM |

| master1 | IBM Power E850 (8408-E8E) @ 3.02 GHz (LPAR) |

| master2 | IBM Power E850 (8408-E8E) (@ 3.02 GHz (LPAR) |

| master3 | IBM Power E850 (8408-E8E) @ 3.02 GHz (LPAR) |

| 1 Db2 Warehouse worker node | Per node: 160 vCPUs (SMT 8), 512 GB RAM |

| perfzz17 | Power System S922 @ 2.9 GHz |

| 3 E2E data science worker nodes | 2 Power L922 nodes (per node): 40 vCPUs, 160 GB RAM 1 Power E950 node (per node): 32 vCPUs, 128 GB RAM Total: 112 vCPU, 448 GB RAM |

| NFS (external to the OpenShift Container Platform cluster) | GPFS for NFS, 2 GPFS servers collocating with protocol nodes Per node: 24 vCPU, 496 GB RAM |

| wmlaaplp1 -gpfs server1 | IBM Power E850 (8408-E8E) @ 3.02 GHz (LPAR) |

| wmlaaplp1 -gpfs server2 | IBM Power E850 (8408-E8E) @ 3.02 GHz (LPAR) |

Intel cluster:

11-node KVM Cluster – Single Cloud Pak for Data instance: cp4d-cpd-cp4d.apps.cp4d-x.toropsp.com

| 1 Bastion node with load balancer | 160 vCPU (SMT 8), 512 GB RAM |

| perfbriggs3 | IBM Power System S822LC (8001-22C) @ 3.491 GHz |

| Three controllers (masters) | per node:16 vCPUs (8 physical cores), 64 GB RAM Total: 48 vCPU, 192 GB RAM |

| master1 | Intel® Xeon® E5-2699 @ 2.20 GHz (VM) |

| master2 | Intel Xeon E5-2699 @ 2.20 GHz (VM) |

| master3 | Intel Xeon E5-2699 @ 2.20 GHz (VM) |

| 1 Db2 Warehouse worker node | Per node: 80 vCPUs (40 physical cores with hyperthreading), 512 GB RAM |

| cclake3 | Intel Xeon 6248 @ 2.50 GHz |

| 6 Worker nodes | 4 VMs from Intel Xeon E5-2690 and 2 from Intel Xeon E5- 2699, each with 32 vCPUs (16 physical cores) and 128 GB RAM Total: 192 vCPU, 768 GB RAM |

| NFS (external to the OpenShift Container Platform cluster) | GPFS for NFS, 2 GPFS servers collocating with protocol nodes Per node: 24 vCPU, 496 GB RAM |

| wmlaaplp1 -gpfs server1 | Power System E850 (8408-E8E) @ 3.02 GHz (LPAR) |

| wmlaaplp1 -gpfs server2 | Power System E850 (8408-E8E) @ 3.02 GHz (LPAR) |

The POWER and Intel clusters used the same GPFS for NFS storage servers and the same type of storage backend. Find out what storage I/O requirement Cloud Pak for Data has and how to verify it using the dd command at:

https://www.ibm.com/support/knowledgecenter/en/SSQNUZ_3.5.0/cpd/troubleshoot/performance-io.html

| Cluster node | user-home-pvc | wdp-couchdb | ||

| 1 GB write (> 209 MBps) |

4 KB write (> 2.5 MBps) |

1 GB write (> 209 MBps) |

4 KB write (> 2.5 MBps) |

|

| perfzz17 (Db2 Warehouse) | 933 | 4.8 | 936 | 4.8 |

Workload definition

| Name | Description | Prerequisite |

|---|---|---|

| Analytic flow (Db2 Warehouse) |

TPC-DS like workload with:

|

Db2 Warehouse deployed on one dedicated worker node Raw data size: 1 TB |

Key performance metrics

The throughput of the Db2 Warehouse application in terms of query per hour is used to compare performance on POWER and Intel, plus the percentage of processor utilization on dedicated nodes is used to indicate the compute power required to generate such throughput.

Given that the entire worker node is dedicated to the Db2 Warehouse workload, the key aspect of the measurement is how effectively it can use both the processor and memory resources provided through the deployment GUI.

Cloud Pak for Data 3.5 release result summary

Db2 Warehouse analytics flow

BI-DAY regression flow employs a TPC-DS like workload with 16 concurrent users running 30 heavy queries with a 1 TB data set.

One dedicated worker node is used within the 8-node private POWER cluster with OpenShift Container Platform 4.5 and GPFS for NFS backed by IBM FlashSystem® storage. The Db2 Warehouse worker node (LPAR) is running in SMT 8 mode with a total of 160 virtual cores (vcores) and 512 GB RAM. The Db2 Warehouse deployment GUI is given 159 vcores and 486 GB RAM for both v3.0.1 and v3.5 on POWER.

- Under Cloud Pak for Data version 3.5, DB2 Warehouse has 48% higher workload throughput with 48% higher mean worker CPU utilization compared to version 3.0.1 on POWER9. Compared to OpenShift Container Platform 4.3, OpenShift Container Platform 4.5 cloud framework lowered CPU throttling, thus allowed more efficient use of allocated CPUs. A comparable Intel server also showed CPU utilization increase from 84% (in OpenShift Container Platform 4.3) to 91.3% (in OpenShift Container Platform 4.5). It benefitted less because the x86 processors have only two hardware threads per physical core, and therefore, it was impacted less by the CPU throttling issue.

- Failure rate: 0% in both versions 3.5 and 3.0.1.

- With OpenShift Container Platform 4.5, we have also compared Db2 Warehouse result in Cloud Pak for Data 3.5 to an on-premises deployment with the same workload on the same server. We found that the throughput difference is only 5.1% lower in a private Cloud Pak for Data cluster compared to the traditional on-premises setup.

Notes:

- Cloud Pak for Data used is version 3.5 under OpenShift Container Platform 4.5 with Db2 Warehouse v11.5.5 deployed on a dedicated worker node, while on-premises is running in a LPAR with Db2 Warehouse v11.5.4 (latest GA version during testing period) on RHEL 8.2 at the same kernel level as CoreOS for OCP without containers

- Measurements for POWER9 run on an IBM Power S922 (2x10-core, 2.9 GHz at SMT8, and 512 GB memory) using one NVMe SSD Controller 172Xa/172Xb (778 GB), 10 GbE two-port network adaptor

We also setup a comparable Intel cluster with the same storage and network options. With the Intel cascade server, 40 physical cores with hyperthreading, the maximum number of virtual cores we can give to the workload is 76 while reminder are used by the cloud infrastructure pods on the same node. Compared to Intel, the POWER platform showed consistent and superior (1.42 times or higher) performance advantage when running the BI-Day workload (warm run).

Note: Measurements for the x86 platform run on a two-socket Intel Xeon Gold 6248 (Cascade Lake) server (2x20-core/2.5 GHz/512 GB memory) using one Ultrastar SN200 series NVMe SSD (1.5 TB), 10 GbE two-port network adapter.

Because the POWER9 processor-based system has 20 cores while the Cascade Lake system has 40 cores, the 1.48X system-level performance advantage translates to 2.96X per core advantage, which means 1.71X price performance advantage.

| IBM Power S922 server (20-core 512 GB) | Intel Xeon Gold 6248 based 2-socket server (40-core 512 GB) | |

|---|---|---|

| Server price (in USD) 3-year warranty | 46,989 | 32,683 |

| Solution cost in USD (1 node) server + Cloud Pak for Data + OpenShift Container Platform + Db2 | 82,339 Per node: (46,989 + 35,350) |

95,123 Per node: (32,683 + 62,440) |

| Total queries per hour | 1985 | 1340 |

| Total queries per hour per 1000 US dollars | 24.10 | 14.08 |

Summary

Overall, while comparing Cloud Pak for Data version 3.5 with the previous version 3.0.1, the current version has 48% throughput increase on POWER for the Db2 Warehouse TPC-DS like workload (BI-Day). This is mostly resulted from the OpenShift Container Platform cloud framework enhancements in version 4.5, as well as the Db2 engine’s ability to scale to all available compute resources efficiently.

Disclaimer and notes

Based on IBM internal testing of Db2 Warehouse, a sample analytic workload of 30 distinct queries of varying complexity (intermediate and complex) levels was run. Results are valid as of 20th November 2020 and the tests were conducted under laboratory condition. Individual results can vary based on workload size, use of storage subsystems, other conditions.

Was this topic helpful?

Document Information

Modified date:

10 August 2021

UID

ibm16398268