Kubernetes service types

If a pod needs to communicate with another pod, it needs a way to know the IP address of the other pod. Kubernetes services provide a mechanism for locating other pods.

According to the Kubernetes networking model, pod IP addresses are ephemeral: if a pod crashes or is deleted and a new pod is created in its place, the new pod most likely receives a new IP address. The Kubernetes service types that are described here let you choose the mechanism that is used to locate other pods.

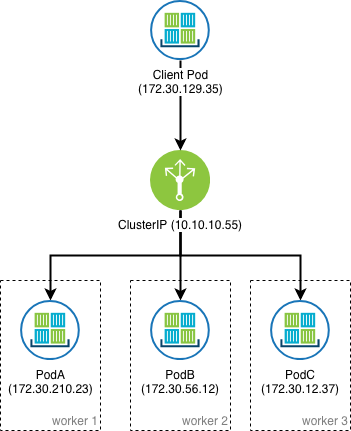

ClusterIP

An internal fixed IP address, known as a ClusterIP, can be created in front of a pod or a set of pod replicas as necessary. This fixed IP address is drawn from a separate IP pool, which is specified at IBM® Cloud Private installation time by using the service_cluster_ip_range parameter in config.yaml. As with the network_cidr parameter, the pool is selected from the RFC 1918 private network ranges. Choose the size of this subnet according to the number of services that you expect in the cluster.

Note: The selected subnet must not conflict with any network resources outside of the cluster that containers might need to communicate with. It also must not conflict with the network_cidr parameter or with any of the subnets that the cluster nodes are on.
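The sizing and conflict rules above can be checked before installation. The following is a minimal sketch that uses Python's standard ipaddress module; the three CIDR values are hypothetical placeholders, not recommended or default settings.

```python
import ipaddress
from itertools import combinations

# Hypothetical example values; substitute the ranges from your own config.yaml
# and the subnets that your cluster nodes are attached to.
subnets = {
    "service_cluster_ip_range": ipaddress.ip_network("10.0.0.0/16"),
    "network_cidr": ipaddress.ip_network("10.1.0.0/16"),
    "node_subnet": ipaddress.ip_network("192.168.10.0/24"),
}


def find_conflicts(named_subnets):
    """Return the sorted name pairs whose address ranges overlap."""
    return sorted(
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(named_subnets.items(), 2)
        if net_a.overlaps(net_b)
    )


conflicts = find_conflicts(subnets)
service_range = subnets["service_cluster_ip_range"]

print("conflicts:", conflicts)
print("private range:", service_range.is_private)
print("addresses in service range:", service_range.num_addresses)
```

An empty conflict list means that none of the ranges overlap. The address count gives a rough upper bound on the number of ClusterIP addresses that the range can supply.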

The ClusterIP provides a load-balanced IP address. Traffic that is sent to this IP address is forwarded to one or more pods that match a label selector. The ClusterIP service must define one or more ports to listen on, each with a target port to which TCP/UDP traffic is forwarded on the containers. Like the pod IP address, the IP address that is used for the ClusterIP is not routable outside of the cluster.
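As an illustration of the selector and port mapping, the following sketch builds a ClusterIP service manifest as a Python dictionary and prints it as JSON. The field names follow the Kubernetes Service API; the service name, label, and port numbers are hypothetical.

```python
import json

# All names, labels, and port numbers below are hypothetical.
cluster_ip_service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "example-backend"},
    "spec": {
        "type": "ClusterIP",
        # Pods that carry this label receive the traffic.
        "selector": {"app": "example-backend"},
        "ports": [
            {
                "name": "http",
                "protocol": "TCP",
                "port": 80,          # port that the ClusterIP listens on
                "targetPort": 8080,  # port that the container listens on
            }
        ],
    },
}

manifest_json = json.dumps(cluster_ip_service, indent=2)
print(manifest_json)
```

Because kubectl accepts JSON as well as YAML, the printed output could be saved to a file and applied with kubectl apply -f.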

Internally, Kubernetes resolves the label selector to a set of pods, takes their ephemeral pod IP addresses, and generates Endpoints resources that the ClusterIP proxies traffic to. The kube-proxy that runs on each worker node configures the forwarding rules on that node. These rules forward traffic that is sent to the ClusterIP address to the ephemeral IP address of a live pod that matches the label selector, which can be anywhere in the cluster. The forwarding rules are updated when services are created or removed, when pods that match label selectors are started or removed, or when pod liveness changes.
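The resolution from a label selector to a set of endpoint addresses can be pictured with a small simulation. This is a simplified sketch, not the Kubernetes implementation; the Pod class, the pod names, and the IP addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict
    live: bool = True


def matches(selector, labels):
    """A pod matches when it carries every key/value pair in the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


def resolve_endpoints(selector, pods):
    """Return the IP addresses of live pods that match the selector."""
    return [pod.ip for pod in pods if pod.live and matches(selector, pod.labels)]


pods = [
    Pod("web-1", "10.1.0.11", {"app": "web"}),
    Pod("web-2", "10.1.0.12", {"app": "web"}, live=False),
    Pod("db-1", "10.1.0.20", {"app": "db"}),
]
selector = {"app": "web"}

endpoints_before = resolve_endpoints(selector, pods)

# The failed pod is replaced; the new pod receives a different IP address.
pods[1] = Pod("web-3", "10.1.0.37", {"app": "web"})
endpoints_after = resolve_endpoints(selector, pods)

print(endpoints_before)
print(endpoints_after)
```

Clients keep using the same ClusterIP throughout; only the endpoint list behind it changes.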

A pod's liveness is determined by a health check that is defined in the YAML for the deployment, as part of the pod's resource definition. This health check can be an HTTP GET that expects a success status code such as 200, a TCP port that must accept a connection, or a command that is executed inside the container and must return a specific exit code. Health checks are performed locally on each worker node by the kubelet process and synced with the control plane. If a health check failure threshold is met, the pod is removed from the set of targets that the ClusterIP forwards traffic to.
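The failure threshold behavior can be sketched as a counter of consecutive failed checks. The ProbeTracker class below is a hypothetical illustration and not kubelet code; the threshold of 3 is an example value.

```python
class ProbeTracker:
    """Track consecutive health check failures for one pod."""

    def __init__(self, failure_threshold=3):
        self.failure_threshold = failure_threshold
        self.consecutive_failures = 0
        self.healthy = True

    def record(self, success):
        """Record one check result and return the resulting health state."""
        if success:
            # A successful check resets the failure count.
            self.consecutive_failures = 0
            self.healthy = True
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.failure_threshold:
                self.healthy = False
        return self.healthy


tracker = ProbeTracker(failure_threshold=3)
results = [True, False, False, True, False, False, False]
history = [tracker.record(result) for result in results]
print(history)
```

In this run, two isolated failures do not change the state. Only the third consecutive failure at the end marks the pod as unhealthy, at which point it would be taken out of the forwarding targets.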

Two main technologies can be used to implement the forwarding rules: iptables or ipvs.

- iptables: kube-proxy creates and syncs local iptables rules that intercept traffic to the ClusterIP on each node, and selects a healthy backend pod at random to forward traffic to.

- ipvs: kube-proxy creates and syncs ipvs rules that correspond to the Kubernetes services and can apply a load-balancing algorithm when selecting a backend to proxy the traffic to.

Compared with iptables, ipvs has the following advantages:

- Higher performance for packet processing and for inserting new rules

- Purpose-built support for service load balancing, with a choice of load-balancing algorithms
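The difference between random backend selection and an explicit load-balancing algorithm can be sketched as follows. Round robin is used here only as one example of such an algorithm. The backend addresses are hypothetical, and the code models the selection logic only, not the kernel mechanisms.

```python
import itertools
import random

# Hypothetical pod IP addresses behind one ClusterIP.
backends = ["10.1.0.11", "10.1.0.12", "10.1.0.13"]


def random_picker(pool, seed=None):
    """iptables-style selection: each connection gets a random backend."""
    rng = random.Random(seed)
    return lambda: rng.choice(pool)


def round_robin_picker(pool):
    """Example ipvs-style algorithm: backends are used in rotation."""
    rotation = itertools.cycle(pool)
    return lambda: next(rotation)


pick_random = random_picker(backends, seed=42)
pick_round_robin = round_robin_picker(backends)

random_picks = [pick_random() for _ in range(6)]
round_robin_picks = [pick_round_robin() for _ in range(6)]

print("random:     ", random_picks)
print("round robin:", round_robin_picks)
```

With round robin, every backend receives exactly two of the six connections. With random selection, the distribution evens out only over a large number of connections.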

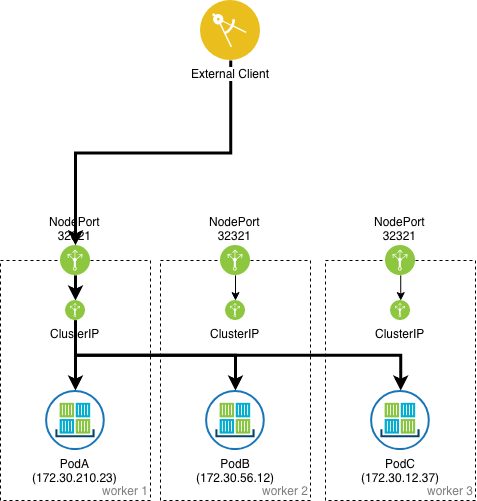

NodePort

Services of type NodePort build on top of ClusterIP type services by exposing the ClusterIP service outside of the cluster on high ports (default 30000-32767). If no port number is specified, Kubernetes automatically selects a free port. The local kube-proxy is responsible for listening to the port on the node and forwarding client traffic on the NodePort to the ClusterIP.

By default, every node in the cluster listens on this port, including nodes on which no pod that matches the label selector is running. Traffic on such nodes is internally NATed and forwarded to the target pod (Cluster external traffic policy).
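The following sketch builds a NodePort service manifest and checks a requested port against the default range. The helper functions, service name, label, and port numbers are hypothetical; the manifest field names follow the Kubernetes Service API.

```python
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)  # 30000-32767 inclusive


def validate_node_port(port, allowed=DEFAULT_NODE_PORT_RANGE):
    """Return the port if it is usable; None means 'let Kubernetes choose'."""
    if port is not None and port not in allowed:
        raise ValueError(
            f"nodePort {port} is outside {allowed.start}-{allowed.stop - 1}"
        )
    return port


def node_port_service(name, selector, port, target_port, node_port=None):
    """Build a NodePort service manifest as a dictionary."""
    port_spec = {"protocol": "TCP", "port": port, "targetPort": target_port}
    if validate_node_port(node_port) is not None:
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": "NodePort", "selector": selector, "ports": [port_spec]},
    }


# A fixed port, and a service that leaves the choice to Kubernetes.
fixed = node_port_service("example-web", {"app": "web"}, 80, 8080, node_port=30080)
automatic = node_port_service("example-web", {"app": "web"}, 80, 8080)

print(fixed["spec"]["ports"])
print(automatic["spec"]["ports"])
```

Omitting nodePort from the port definition corresponds to the case in which Kubernetes selects a free port automatically.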

This behavior can be controlled in the Kubernetes service object manifest by setting the .spec.externalTrafficPolicy property to Local, which causes only worker nodes that run a matching pod to accept traffic on the specified NodePort. This way an extra hop can be avoided and the client's IP address is preserved when it communicates with the pod.
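The effect of the two external traffic policies can be compared with a small simulation. This is a simplified model under stated assumptions, not kube-proxy logic; the node names and the pod placement are hypothetical.

```python
def serving_nodes(nodes, pod_placement, policy="Cluster"):
    """Return the nodes that can deliver NodePort traffic to a matching pod.

    nodes: all node names in the cluster
    pod_placement: mapping of node name to the number of matching pods on it
    """
    if policy == "Cluster":
        # Any node accepts the traffic and forwards it, possibly to another node.
        has_backend = any(pod_placement.get(node, 0) > 0 for node in nodes)
        return list(nodes) if has_backend else []
    if policy == "Local":
        # Only nodes that run a matching pod deliver the traffic.
        return [node for node in nodes if pod_placement.get(node, 0) > 0]
    raise ValueError(f"unknown externalTrafficPolicy: {policy}")


nodes = ["worker-1", "worker-2", "worker-3"]
placement = {"worker-2": 2}  # both matching pods run on worker-2

cluster_policy_nodes = serving_nodes(nodes, placement, policy="Cluster")
local_policy_nodes = serving_nodes(nodes, placement, policy="Local")

print("Cluster:", cluster_policy_nodes)
print("Local:  ", local_policy_nodes)
```

With the Local policy, a client or load balancer must send traffic to worker-2 only, which is why node-level health checks matter in that configuration.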

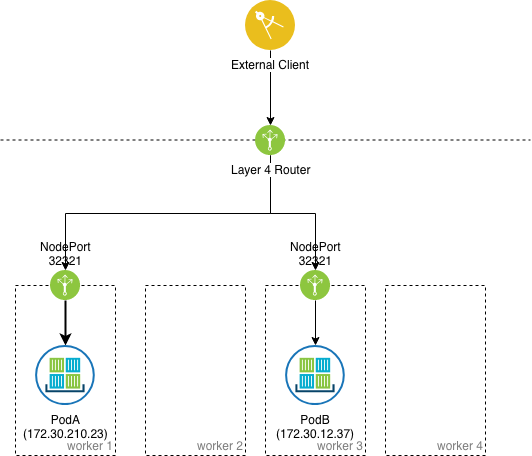

NodePort can be useful when manually configuring external load balancers to forward layer 4 traffic from clients outside of the cluster to a particular set of pods that are running in the Kubernetes cluster. In such cases, the specific port number that is used for NodePort must be set ahead of time, and the external load balancer must be configured to forward traffic to the listening port on all worker nodes. A health check must be configured on the external load balancer to determine which worker nodes are running healthy pods and which aren't.
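The health check that an external load balancer performs against the worker nodes can be approximated with a TCP connection test. The sketch below is an assumption about how such a check might look, not the behavior of any particular load balancer product. The node addresses come from a documentation-only range, and the check is stubbed so that the example runs without a cluster.

```python
import socket


def tcp_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def healthy_targets(node_addresses, node_port, check=tcp_port_open):
    """Return the node addresses that pass the health check on the NodePort."""
    return [address for address in node_addresses if check(address, node_port)]


# Stubbed node state for illustration; a real check would use tcp_port_open.
node_state = {"192.0.2.11": True, "192.0.2.12": False, "192.0.2.13": True}
targets = healthy_targets(
    node_state, 30080, check=lambda host, port: node_state[host]
)
print(targets)
```

Passing the check function as a parameter keeps the target selection separate from the probing mechanism, so an HTTP check could be substituted without changing healthy_targets.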

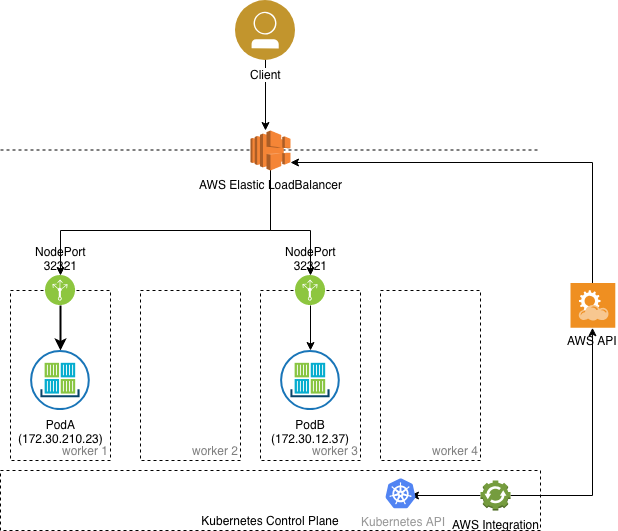

LoadBalancer

The LoadBalancer service type builds on top of the NodePort service type by provisioning and configuring external load balancers from public and private cloud providers. It exposes services that are running in the cluster by forwarding layer 4 traffic to worker nodes. This is a dynamic way of implementing the scenario in which an external load balancer forwards traffic to NodePort type services. However, it usually requires an integration that runs inside the Kubernetes cluster and watches the API for services of type LoadBalancer.
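The core of such an integration can be sketched as a reconcile step that turns the observed services into a desired load balancer configuration. This is a hypothetical illustration only. It does not call the Kubernetes API or any cloud provider, and the service names, ports, and node addresses are invented.

```python
def reconcile(services, worker_nodes):
    """Build the desired external load balancer configuration.

    services: service manifests (dicts), as a watch on the API might deliver them
    worker_nodes: addresses of the worker nodes to forward traffic to
    """
    desired = {}
    for service in services:
        if service["spec"].get("type") != "LoadBalancer":
            continue  # other service types need no external load balancer
        for port in service["spec"]["ports"]:
            listener = (service["metadata"]["name"], port["port"])
            desired[listener] = [(node, port["nodePort"]) for node in worker_nodes]
    return desired


observed_services = [
    {
        "metadata": {"name": "example-web"},
        "spec": {"type": "LoadBalancer", "ports": [{"port": 80, "nodePort": 31080}]},
    },
    {
        "metadata": {"name": "example-internal"},
        "spec": {"type": "ClusterIP", "ports": [{"port": 5432}]},
    },
]
worker_nodes = ["192.0.2.11", "192.0.2.12"]

load_balancer_config = reconcile(observed_services, worker_nodes)
print(load_balancer_config)
```

Each listener on the external load balancer maps to the service's NodePort on every worker node, which mirrors the manual NodePort setup but is derived automatically from the service definitions.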