Using ATOMIC blocks

This topic contains examples of how to use ATOMIC blocks.

About this task

    DECLARE routingCache SHARED ROW;

    CREATE COMPUTE MODULE examples
      CREATE FUNCTION Main() RETURNS BOOLEAN
      BEGIN
        -- Double-check locking pattern: make sure only one thread
        -- loads the cache. This is the first check, done without a lock.
        IF routingCache.init IS NOT TRUE THEN
          CALL LoadCache();
        END IF;

        -- Find the lookup key in the input message
        DECLARE lookupKey CHARACTER;
        SET lookupKey = InputRoot.JSON.Data.info.serviceName;

        -- Set the destination URL based on the data retrieved from the cache.
        SET OutputLocalEnvironment.Destination.HTTP.RequestURL = routingCache.targets.{lookupKey}.url;

        RETURN TRUE;
      END;

      CREATE PROCEDURE LoadCache()
      SharedVariableMutex1: BEGIN ATOMIC READ WRITE
        -- Double-check locking pattern: make sure only one thread
        -- loads the cache. This is the second check, done under lock.
        IF routingCache.init IS NOT TRUE THEN
          -- This would normally involve a database lookup, UDP read, etc., but
          -- for this example we hard-code the strings.
          SET routingCache.targets.first.url = 'https://first.host/example/url';
          SET routingCache.targets.second.url = 'https://second.host/example/url';
          SET routingCache.init = TRUE;
        END IF;
      END;
    END MODULE;

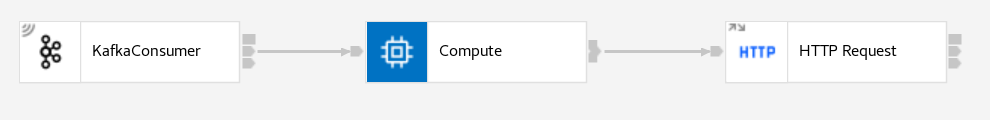

The Compute node first checks whether the cache is initialized, and it does not need to take a lock to do so. The init flag starts as NULL (hence the need for IS NOT TRUE rather than IS FALSE). Several threads could find the value to be NULL at the same time, but the second check is done inside an ATOMIC block, so it succeeds for only one thread. In this simplified case the cache could safely be loaded by multiple threads, so you could remove the ATOMIC keyword and still see correct (if slightly inefficient) behavior. In general, however, you should not rely on this: most real caches cannot safely be loaded by several threads at once.
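For comparison, the same double-checked locking pattern can be sketched in plain Java. This is an analogy only. It is not the App Connect Enterprise JavaCompute API, and it makes no claim about how the integration server implements ATOMIC blocks. The names `RoutingCache`, `lookupUrl`, `loadCount`, and `lookupFromThreads` are hypothetical. A `volatile boolean` stands in for `routingCache.init`, a `ConcurrentHashMap` for `routingCache.targets` (each individual read is thread-safe), and a `synchronized` block for the ATOMIC block.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Plain-Java analogy of the first ESQL example; all names here are hypothetical.
final class RoutingCache {
    // Stands in for routingCache.targets; each individual get/put is thread-safe.
    private static final Map<String, String> targets = new ConcurrentHashMap<>();
    // Stands in for routingCache.init; volatile so the unlocked first check sees the update.
    private static volatile boolean init = false;
    // Stands in for the SharedVariableMutex1 ATOMIC block.
    private static final Object loadLock = new Object();
    // Counts real loads so the pattern can be checked (demonstration only).
    private static final AtomicInteger loads = new AtomicInteger();

    static String lookupUrl(String serviceName) {
        if (!init) {              // first check, done without the lock
            loadCache();
        }
        return targets.get(serviceName); // single read after loading: no lock taken
    }

    private static void loadCache() {
        synchronized (loadLock) {
            if (!init) {          // second check, done under the lock
                // Hard-coded, as in the ESQL example; normally a database lookup or similar.
                targets.put("first", "https://first.host/example/url");
                targets.put("second", "https://second.host/example/url");
                loads.incrementAndGet();
                init = true;      // set last, so the fast path never skips a half-finished load
            }
        }
    }

    static int loadCount() {
        return loads.get();
    }

    // Starts threadCount threads that look up serviceName concurrently;
    // returns true if every thread got the expected URL.
    static boolean lookupFromThreads(int threadCount, String serviceName, String expected) {
        AtomicInteger matches = new AtomicInteger();
        Thread[] threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++) {
            threads[i] = new Thread(() -> {
                if (expected.equals(lookupUrl(serviceName))) {
                    matches.incrementAndGet();
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return matches.get() == threadCount;
    }
}
```

A Java `boolean` defaults to `false` rather than NULL, so `!init` plays the role of IS NOT TRUE here. The `volatile` modifier matters in Java. Without it, the unlocked first check is not guaranteed to see the write made under the lock.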

In this simplified case, once the cache is loaded there is no further need for ATOMIC blocks. The only other accesses are reads from the cache, and each individual read is safe because of the lower-level locking. As a result, the HTTP URL can be set from the cache with a simple assignment, assuming that all other parameters (credentials, and so on) are the same for all possible destinations. Reading a single value in this way keeps the locking simple, but it is not possible in all scenarios.

    DECLARE routingCache SHARED ROW;

    CREATE COMPUTE MODULE mqExamples
      CREATE FUNCTION Main() RETURNS BOOLEAN
      BEGIN
        -- Double-check locking pattern: make sure only one thread
        -- loads the cache. This is the first check, done without a lock.
        IF routingCache.init IS NOT TRUE THEN
          CALL LoadCache();
        END IF;

        -- Find the lookup key in the input message
        DECLARE lookupKey CHARACTER;
        SET lookupKey = InputRoot.JSON.Data.info.serviceName;

        -- Set the MQ destination (queue manager and queue) based on the data retrieved from the cache.
        CREATE FIELD OutputLocalEnvironment.Destination.MQ.DestinationData;
        DECLARE dataRef REFERENCE TO OutputLocalEnvironment.Destination.MQ.DestinationData;
        CALL ReadFromCache(lookupKey, dataRef);

        RETURN TRUE;
      END;

      CREATE PROCEDURE ReadFromCache(IN lookupKey CHARACTER, INOUT dataRef REFERENCE)
      RoutingCacheLockLabel: BEGIN ATOMIC READ ONLY -- Must use the same label as LoadCache() and ReloadCache() so that all three share one lock
        SET dataRef.queueManagerName = routingCache.targets.{lookupKey}.queueManagerName;
        SET dataRef.queueName = routingCache.targets.{lookupKey}.queueName;
      END;

      CREATE PROCEDURE LoadCache()
      RoutingCacheLockLabel: BEGIN ATOMIC READ WRITE
        -- Double-check locking pattern: make sure only one thread
        -- loads the cache. This is the second check, done under lock.
        IF routingCache.init IS NOT TRUE THEN
          -- This would normally involve a database lookup, UDP read, etc., but
          -- for this example we hard-code the strings.
          SET routingCache.targets.first.queueManagerName = 'ONEQM';
          SET routingCache.targets.first.queueName = 'ONE.QUEUE';
          SET routingCache.targets.second.queueManagerName = 'TWOQM';
          SET routingCache.targets.second.queueName = 'TWO.QUEUE';
          SET routingCache.init = TRUE;
        END IF;
      END;

      -- Called to reload cache data at various points without stopping the flow
      CREATE PROCEDURE ReloadCache()
      RoutingCacheLockLabel: BEGIN ATOMIC READ WRITE
        -- This would normally involve a database lookup, UDP read, etc., but
        -- for this example we hard-code the strings.
        SET routingCache.targets.first.queueManagerName = 'OTHERONEQM';
        SET routingCache.targets.first.queueName = 'OTHER.ONE.QUEUE';
        SET routingCache.targets.second.queueManagerName = 'OTHERTWOQM';
        SET routingCache.targets.second.queueName = 'OTHER.TWO.QUEUE';
        SET routingCache.init = TRUE;
      END;
    END MODULE;

In this case, both values must be read from the cache, and the reads must be done in an ATOMIC block to ensure that both values come from the same set. If ReloadCache() ran between the two reads, a thread could read 'ONEQM' from the old set and 'OTHER.ONE.QUEUE' from the new set, and this mismatched pair would cause the MQOutput node to fail.
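The consistency requirement can be illustrated with a plain-Java sketch that uses `java.util.concurrent.locks.ReentrantReadWriteLock` as an analogy for the shared RoutingCacheLockLabel lock. It is not the App Connect Enterprise API, and the names `MqRoutingCache`, `readDestination`, `reload`, and `countMismatchedReads` are hypothetical. Both values are read under a single read lock, and both are replaced under the write lock, so a reader never sees one value from the old set and one from the new set.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Plain-Java analogy of the MQ example; all names here are hypothetical.
final class MqRoutingCache {
    // One lock shared by every reader and writer, like the shared RoutingCacheLockLabel label.
    private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
    // Two separate fields per target, like queueManagerName and queueName.
    private final Map<String, String> queueManagerNames = new HashMap<>();
    private final Map<String, String> queueNames = new HashMap<>();

    MqRoutingCache() {
        reload("ONEQM", "ONE.QUEUE");
    }

    // Like ReadFromCache(): both values are read under one read lock, so they form a matching pair.
    String[] readDestination(String key) {
        lock.readLock().lock();
        try {
            return new String[] {queueManagerNames.get(key), queueNames.get(key)};
        } finally {
            lock.readLock().unlock();
        }
    }

    // Like ReloadCache(): both values for "first" are replaced while holding the write lock.
    void reload(String queueManagerName, String queueName) {
        lock.writeLock().lock();
        try {
            queueManagerNames.put("first", queueManagerName);
            queueNames.put("first", queueName);
        } finally {
            lock.writeLock().unlock();
        }
    }

    // Reloads repeatedly on one thread while another thread reads;
    // returns how many mismatched pairs the reader saw.
    static int countMismatchedReads(int iterations) {
        MqRoutingCache cache = new MqRoutingCache();
        AtomicInteger mismatches = new AtomicInteger();
        Thread reloader = new Thread(() -> {
            for (int i = 0; i < iterations; i++) {
                if (i % 2 == 0) {
                    cache.reload("OTHERONEQM", "OTHER.ONE.QUEUE");
                } else {
                    cache.reload("ONEQM", "ONE.QUEUE");
                }
            }
        });
        Thread reader = new Thread(() -> {
            for (int i = 0; i < iterations; i++) {
                String[] d = cache.readDestination("first");
                boolean matched = ("ONEQM".equals(d[0]) && "ONE.QUEUE".equals(d[1]))
                        || ("OTHERONEQM".equals(d[0]) && "OTHER.ONE.QUEUE".equals(d[1]));
                if (!matched) {
                    mismatches.incrementAndGet();
                }
            }
        });
        reloader.start();
        reader.start();
        try {
            reloader.join();
            reader.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return mismatches.get();
    }
}
```

If `readDestination` instead took and released the lock separately for each value, a reload could run between the two reads and produce exactly the 'ONEQM' and 'OTHER.ONE.QUEUE' combination that makes the MQOutput node fail.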

Although you could put the ATOMIC keyword (with READ WRITE, in case the cache needs to be loaded) on the whole Main() function of the Compute node, doing so would widen the scope of the lock. For better scalability, it is generally better to take locks only when they are needed and to release them as soon as possible.

Using READ ONLY for reads, and READ WRITE only when writing, provides the most scalable approach to shared-variable locking. Threads that read from the cache do not block each other when they take read locks. A write lock is needed only when a thread must write to the cache.
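The difference between the two modes can be sketched with Java's `ReentrantReadWriteLock`, used here as an analogy in which the read lock corresponds to READ ONLY and the write lock corresponds to READ WRITE. The class and method names are hypothetical, and the sketch makes no claim about how the integration server implements ATOMIC blocks internally. It checks three behaviors. First, a second reader gets the lock while a first reader holds it. Second, a writer is refused during that time. Third, the writer succeeds once the readers have released the lock.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Plain-Java analogy of READ ONLY versus READ WRITE locking; names are hypothetical.
final class ReadWriteLockDemo {
    // Runs the task on a separate thread and waits for it to finish.
    private static void runOnOtherThread(Runnable task) {
        Thread t = new Thread(task);
        t.start();
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Returns {secondReaderGotLock, writerGotLockDuringRead, writerGotLockAfterRead}.
    static boolean[] probe() {
        ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
        AtomicBoolean secondReader = new AtomicBoolean();
        AtomicBoolean writerDuringRead = new AtomicBoolean();
        AtomicBoolean writerAfterRead = new AtomicBoolean();

        lock.readLock().lock(); // this thread acts as a reader (like READ ONLY)
        try {
            // Another reader is not blocked by the first one.
            runOnOtherThread(() -> {
                if (lock.readLock().tryLock()) {
                    secondReader.set(true);
                    lock.readLock().unlock();
                }
            });
            // A writer (like READ WRITE) cannot get the lock while a reader holds it.
            runOnOtherThread(() -> {
                if (lock.writeLock().tryLock()) {
                    writerDuringRead.set(true);
                    lock.writeLock().unlock();
                }
            });
        } finally {
            lock.readLock().unlock();
        }
        // With no readers left, the writer gets the lock.
        runOnOtherThread(() -> {
            if (lock.writeLock().tryLock()) {
                writerAfterRead.set(true);
                lock.writeLock().unlock();
            }
        });
        return new boolean[] {secondReader.get(), writerDuringRead.get(), writerAfterRead.get()};
    }
}
```

`tryLock()` is used so that the probe reports a refusal instead of waiting forever. A real writer would call `lock()` and wait until the readers have finished.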