Decision Validation Services let you execute rulesets on usage scenarios for:

- Testing, to validate the accuracy of a ruleset by comparing the results that you expect with the actual results obtained during execution.

- Simulations, to evaluate the potential impact of changes to rules.

You can run tests either in the Decision Center Enterprise console or in Rule Designer, which provides additional debugging capabilities. Decision Center distinguishes between tests and simulations through Test Suite and Simulation artifacts. In Rule Designer, this distinction is not visible, but you can create key performance indicators (KPIs) for use in Decision Center.

Scenarios

A scenario represents the values of the input parameters of a ruleset, that is, the input data to ruleset execution, plus any expectations on the execution results that you want to test.

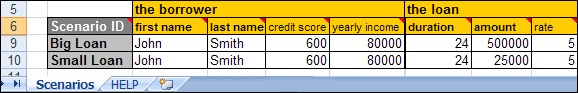

The proposed format for entering your scenarios is Excel. In the Excel format, each scenario is identified by a unique Scenario ID.

In the API, a scenario suite represents a list of scenarios. If you use the proposed Excel format to store your scenarios, your scenario suite becomes an Excel scenario file. These files contain a Scenarios worksheet for the values of the input data.

For example, to test rules that process loan requests, you might define:

- A scenario named Big Loan, to see how your rules work with a big loan request.

- A scenario named Small Loan, to see how your rules work with a small loan request.
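To make the relationship between scenarios, Scenario IDs, and a scenario suite concrete, here is a minimal Java sketch. The `Scenario` record and `ScenarioSuite` class are hypothetical illustrations, not Decision Validation Services API types, and the input names and values (`amount`, `durationYears`, `approved`) are invented for the example.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical scenario: an ID, the ruleset input values,
// and the expectations on the execution results.
record Scenario(String id, Map<String, Object> inputs, Map<String, Object> expected) {}

// Hypothetical scenario suite: an ordered list of scenarios whose IDs must be unique,
// mirroring the Scenario ID column of the Excel format.
final class ScenarioSuite {
    private final Map<String, Scenario> byId = new LinkedHashMap<>();

    void add(Scenario s) {
        // Reject a second scenario with an ID that is already in the suite.
        if (byId.putIfAbsent(s.id(), s) != null) {
            throw new IllegalArgumentException("Duplicate Scenario ID: " + s.id());
        }
    }

    List<Scenario> scenarios() {
        return List.copyOf(byId.values()); // insertion order is preserved
    }

    // Illustrative values only; they are not taken from any real ruleset.
    static ScenarioSuite loanExamples() {
        ScenarioSuite suite = new ScenarioSuite();
        suite.add(new Scenario("Big Loan",
                Map.of("amount", 1_000_000, "durationYears", 30),
                Map.of("approved", false)));
        suite.add(new Scenario("Small Loan",
                Map.of("amount", 10_000, "durationYears", 5),
                Map.of("approved", true)));
        return suite;
    }
}
```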

The Excel format is best suited for:

- Relatively simple and small object models.

- Testing that uses up to a few thousand scenarios.

You use a custom format in cases where the scenarios contain complex input or output parameters. You can customize the behavior of the Excel format or create custom formats. The most common example of customization is to access scenarios from a database instead of an Excel file.
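A custom format boils down to code that turns some data source into scenarios. The following Java sketch assumes a hypothetical `ScenarioSource` interface (not the Decision Validation Services scenario provider API) and builds scenarios from rows shaped like the result of a database query. The `SCENARIO_ID` column name is also an assumption. A real implementation would read the rows through JDBC or a similar library instead of an in-memory list.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical scenario type for this sketch: an ID plus input values.
record Scenario(String id, Map<String, Object> inputs) {}

// Hypothetical abstraction over a scenario format; not the DVS API.
interface ScenarioSource {
    int count();
    Scenario get(int index);
}

// Builds scenarios from rows such as those returned by a database query.
// Each row is a column-name -> value map; "SCENARIO_ID" is an assumed column name.
final class RowScenarioSource implements ScenarioSource {
    private final List<Map<String, Object>> rows;

    RowScenarioSource(List<Map<String, Object>> rows) {
        this.rows = List.copyOf(rows);
    }

    @Override
    public int count() {
        return rows.size();
    }

    @Override
    public Scenario get(int index) {
        // Copy the row, then move the ID column out of the input values.
        Map<String, Object> inputs = new HashMap<>(rows.get(index));
        Object id = inputs.remove("SCENARIO_ID");
        if (id == null) {
            throw new IllegalStateException("Row " + index + " has no SCENARIO_ID");
        }
        return new Scenario(id.toString(), inputs);
    }
}
```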

Testing

You use testing to verify that a ruleset is correctly designed and written. You do so by comparing the expected results, that is, how you expect the rules to behave, with the actual results obtained when the rules are applied to the scenarios that you defined.

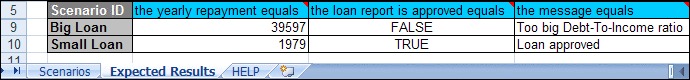

In the Excel format, expected results are displayed in a separate sheet alongside your scenarios. You specify the expected results that you want to test when you generate the Excel scenario file template. The following figure shows the Expected Results sheet for the big and small loan scenarios:

In this example, the sheet tests three values:

- The amount of the yearly repayment.

- Whether the loan is approved.

- The message generated by the loan request application.

This Expected Results sheet represents these three tests as columns, and you enter the expected results for all the scenarios necessary to cover the validation of your rules.
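The comparison behind the Expected Results sheet can be sketched as follows: each scenario row maps test columns to expected values, and every column is checked against the value observed after execution. This is a hypothetical Java illustration, not how Decision Validation Services implements the check. It uses exact equality, whereas a real tool may compare numbers within a precision tolerance.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Objects;

// One failed check: which scenario, which tested column, and the two values.
record Mismatch(String scenarioId, String column, Object expected, Object actual) {}

final class ExpectedResultsCheck {
    // expected: scenario ID -> (column name -> expected value), as in an Expected Results sheet.
    // actual:   scenario ID -> (column name -> value observed after executing the ruleset).
    static List<Mismatch> compare(Map<String, Map<String, Object>> expected,
                                  Map<String, Map<String, Object>> actual) {
        List<Mismatch> mismatches = new ArrayList<>();
        for (var scenario : expected.entrySet()) {
            // A scenario with no actual results yields a mismatch for every tested column.
            Map<String, Object> observed = actual.getOrDefault(scenario.getKey(), Map.of());
            for (var test : scenario.getValue().entrySet()) {
                Object got = observed.get(test.getKey());
                if (!Objects.equals(test.getValue(), got)) {
                    mismatches.add(new Mismatch(scenario.getKey(), test.getKey(), test.getValue(), got));
                }
            }
        }
        return mismatches;
    }
}
```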

Similarly, the Expected Execution Details sheet includes more technical tests, for example, on the list of executed rules or on the execution duration. You also specify these tests when you generate the Excel scenario file template.
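The technical tests on the Expected Execution Details sheet can be pictured the same way. In this hypothetical Java sketch, `ExecutionDetails` holds the rules fired and the elapsed time for one scenario. The check assumes that the order of fired rules matters and that the duration test is an upper bound; the rule names used with it are invented.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;

// Hypothetical record of what happened during one scenario execution.
record ExecutionDetails(List<String> rulesFired, Duration elapsed) {}

final class ExecutionDetailsCheck {
    // Returns a description of each failed technical test; an empty list means all passed.
    static List<String> check(ExecutionDetails actual, List<String> expectedRules, Duration maxDuration) {
        List<String> failures = new ArrayList<>();
        // Assumption: the rules must fire exactly in the expected order.
        if (!actual.rulesFired().equals(expectedRules)) {
            failures.add("rules fired: expected " + expectedRules + " but got " + actual.rulesFired());
        }
        // Assumption: the duration test is a maximum allowed execution time.
        if (actual.elapsed().compareTo(maxDuration) > 0) {
            failures.add("duration " + actual.elapsed().toMillis()
                    + " ms exceeds " + maxDuration.toMillis() + " ms");
        }
        return failures;
    }
}
```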

In Rule Designer, you run tests using dedicated launch configurations, as described in Testing rules in Rule Designer.

Simulations

You use simulations to do what-if analysis against realistic data to evaluate the potential impact of changes that you might want to make to your rules. This data corresponds to your usage scenarios and often comes from historical databases that contain real customer information.

When you run a simulation, the report that is returned provides some business-relevant interpretation of the results, based on specified key performance indicators (KPIs).

In Rule Designer, you define KPIs and make them available to business users in the Enterprise console, where simulations are run.
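As an illustration of what a KPI computes, the following Java sketch defines a hypothetical `Kpi` interface that receives each scenario result and produces one business value, here an approval rate. The what-if comparison runs the same scenarios through two stand-in approval rules, simple predicates on the loan amount assumed for the example, and compares the KPI. None of these names come from the Decision Validation Services API.

```java
import java.util.List;
import java.util.function.Predicate;

// Hypothetical decision produced by executing the rules on one scenario.
record LoanDecision(String scenarioId, boolean approved) {}

// Hypothetical KPI: a business measure computed over all scenario results.
interface Kpi<R> {
    void onScenarioResult(R result);
    double value();
}

// Example KPI: percentage of scenarios whose loan request was approved.
final class ApprovalRateKpi implements Kpi<LoanDecision> {
    private long approved;
    private long total;

    @Override
    public void onScenarioResult(LoanDecision d) {
        total++;
        if (d.approved()) approved++;
    }

    @Override
    public double value() {
        return total == 0 ? 0.0 : 100.0 * approved / total;
    }
}

final class WhatIf {
    // Runs a stand-in "ruleset" over each scenario's loan amount and returns the KPI value.
    static double approvalRate(List<Double> loanAmounts, Predicate<Double> approveRule) {
        Kpi<LoanDecision> kpi = new ApprovalRateKpi();
        int i = 0;
        for (double amount : loanAmounts) {
            kpi.onScenarioResult(new LoanDecision("S" + i++, approveRule.test(amount)));
        }
        return kpi.value();
    }
}
```

With four hypothetical loan amounts of 5,000, 50,000, 500,000 and 2,000,000, raising the approval limit from 100,000 to 1,000,000 moves the approval rate from 50% to 75%, which is the kind of impact a simulation report is meant to surface.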

Reports

Running tests in Rule Designer sends the specified ruleset, the scenarios, and their expected results and execution details to the Scenario Service Provider (SSP). The SSP returns all the information generated during execution. A report provides a reduced version of this information, for example:

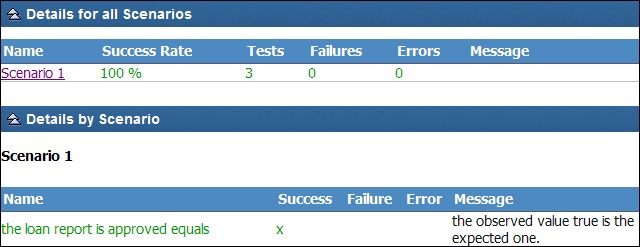

Reports contain a Summary area showing the location of the execution, the precision level, and the success rate, that is, the percentage of scenarios that were executed successfully.

Then, the Details by Scenario area shows the results of each test on each scenario.

A test can have one of the following results:

- Successful: the expected results match the actual results.

- Failure: the expected results differ from the actual results.

- Error: the scenario could not be executed, for example because an entry in the scenario file is not correctly formatted.
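The three outcomes and the success rate from the Summary area can be expressed in a few lines of Java. This is a hypothetical sketch: it assumes that a test on a scenario that did not run is reported as an error, and that a scenario counts toward the success rate only when all of its tests are successful.

```java
import java.util.List;
import java.util.Objects;

enum TestResult { SUCCESSFUL, FAILURE, ERROR }

final class TestOutcomes {
    // Classifies one test on one scenario.
    static TestResult classify(boolean scenarioExecuted, Object expected, Object actual) {
        if (!scenarioExecuted) {
            return TestResult.ERROR; // e.g. a badly formatted entry in the scenario file
        }
        return Objects.equals(expected, actual) ? TestResult.SUCCESSFUL : TestResult.FAILURE;
    }

    // Success rate in percent; a scenario counts as successful only if all its tests are SUCCESSFUL.
    static double successRate(List<List<TestResult>> resultsPerScenario) {
        if (resultsPerScenario.isEmpty()) return 0.0;
        long ok = resultsPerScenario.stream()
                .filter(tests -> tests.stream().allMatch(r -> r == TestResult.SUCCESSFUL))
                .count();
        return 100.0 * ok / resultsPerScenario.size();
    }
}
```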

Parallel execution

The time it takes to run a test or simulation depends on the kind of data you use and on the number of scenarios. If you have millions of scenarios to run and you use a class to calculate the key performance indicator (KPI) of these scenarios, consider parallel execution, which can reduce the overall run time by distributing the work across several processors or threads.

To execute a simulation in parallel, you divide the scenarios among multiple processors or threads, and then run these simulation cases in parallel. You must implement IlrParallelScenarioProvider, which extends the IlrScenarioProvider interface. An instance of this scenario provider specifies how a simulation is partitioned by using a list of scenario suite parts. The provider then uses this list to distribute the simulation cases to multiple parallel processors or threads.
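The partitioning idea can be sketched in plain Java with an `ExecutorService`. The `SuitePart` record (a half-open index range) and the `approves` predicate, which stands in for executing the ruleset on one scenario, are hypothetical; this sketch does not use `IlrParallelScenarioProvider` or any other ODM type. Each part is processed on its own thread and returns a partial count.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.IntPredicate;

// Hypothetical part of a scenario suite: scenario indexes in [from, to).
record SuitePart(int from, int to) {}

final class ParallelRun {
    // Splits scenarioCount scenarios into at most `parts` contiguous, near-equal ranges.
    static List<SuitePart> partition(int scenarioCount, int parts) {
        if (parts <= 0) throw new IllegalArgumentException("parts must be positive");
        List<SuitePart> result = new ArrayList<>();
        int base = scenarioCount / parts;
        int extra = scenarioCount % parts; // the first `extra` parts get one more scenario
        int start = 0;
        for (int p = 0; p < parts && start < scenarioCount; p++) {
            int size = base + (p < extra ? 1 : 0);
            result.add(new SuitePart(start, start + size));
            start += size;
        }
        return result;
    }

    // Runs each part on its own thread and returns the approved count per part, in part order.
    static List<Long> run(List<SuitePart> parts, IntPredicate approves) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, parts.size()));
        try {
            List<Future<Long>> futures = new ArrayList<>();
            for (SuitePart part : parts) {
                futures.add(pool.submit(() -> {
                    long approved = 0;
                    for (int i = part.from(); i < part.to(); i++) {
                        if (approves.test(i)) approved++;
                    }
                    return approved;
                }));
            }
            List<Long> perPart = new ArrayList<>();
            for (Future<Long> f : futures) perPart.add(f.get()); // waits for each part
            return perPart;
        } finally {
            pool.shutdown();
        }
    }
}
```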

You must also implement an IlrKPIResultAggregator KPI class to compute and consolidate the business key performance indicator from the distributed simulations. For more information, see the ilog.rules.dvs.core and ilog.rules.dvs.ssp API packages.
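A dedicated aggregation step is needed because partial KPI values do not always combine by simple averaging. In this hypothetical Java sketch (not the `IlrKPIResultAggregator` contract), each part reports counts rather than a percentage, so parts of different sizes merge exactly.

```java
import java.util.List;

// Hypothetical partial KPI state computed on one part of the simulation.
// Keeping counts (not a percentage) lets parts of different sizes be merged exactly.
record PartialApprovals(long approved, long total) {
    PartialApprovals merge(PartialApprovals other) {
        return new PartialApprovals(approved + other.approved, total + other.total);
    }
}

final class ApprovalRateAggregator {
    // Consolidates the partial results from every part into one approval rate, in percent.
    static double aggregate(List<PartialApprovals> parts) {
        PartialApprovals sum = parts.stream()
                .reduce(new PartialApprovals(0, 0), PartialApprovals::merge);
        return sum.total() == 0 ? 0.0 : 100.0 * sum.approved() / sum.total();
    }
}
```

For example, parts with 1 of 2 and 9 of 10 approvals give 10 of 12, about 83.3%, whereas averaging the two percentages (50% and 90%) would wrongly give 70%.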