Known issues and limitations

Platform known issues and limitations are listed in the IBM Software Hub 5.2 documentation.

See the main list of IBM Software Hub known issues in the IBM Software Hub documentation and the known issues for each of the services that are installed on your system.

The following are known issues specific to watsonx.data:

Spark license type not updated after reapplying Software Hub (SWH) entitlement

When the SWH entitlement is removed and then reapplied using the cpd-cli apply entitlement command, Spark does not automatically update its licenseType field. This can lead to inconsistencies in license recognition within the system.

Workaround: After reapplying the entitlement using the cpd-cli apply entitlement command, manually patch the Spark CR using the following command:

oc patch AnalyticsEngine analyticsengine-sample -n <instance_namespace> --type merge --patch '{"spec": {"wxdLicenseType":"<license_type>"}}'
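If the patch is issued from a script, building the JSON payload programmatically avoids shell-quoting mistakes. The following is a minimal sketch, not an official tool: the function name build_license_patch_command is hypothetical, and the CR name, resource kind, and wxdLicenseType key are taken from the command above.

```python
import json
import shlex


def build_license_patch_command(namespace, license_type,
                                cr_name="analyticsengine-sample"):
    """Return the argument list for the oc patch command shown above."""
    # json.dumps produces the merge-patch body with correct quoting.
    patch = json.dumps({"spec": {"wxdLicenseType": license_type}})
    return [
        "oc", "patch", "AnalyticsEngine", cr_name,
        "-n", namespace,
        "--type", "merge",
        "--patch", patch,
    ]


if __name__ == "__main__":
    # Placeholder values; substitute your own namespace and license type.
    argv = build_license_patch_command("<instance_namespace>", "<license_type>")
    print(shlex.join(argv))
```

The returned list can be passed to subprocess.run on a host where oc is already logged in to the cluster.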

Postgres primary missing after power outage in watsonx.data

Applies to: 2.2.0 and later

After a data center power outage, users running watsonx.data on Software Hub may encounter a failure in the ibm-lh-postgres-edb cluster where no primary instance is available. In one reported case, one pod (ibm-lh-postgres-edb-3) was stuck in a switchover state, displaying the message “This is an old primary instance, waiting for the switchover to finish,” while kubectl cnpg status indicated “Primary instance not found.” This caused the entire cluster to become unresponsive, making watsonx.data unusable.

Workaround: To restore the cluster, complete the following steps:

- Identify a healthy replica: Run the following command to check the status of the Postgres pods:

oc get pods | grep postgres

Look for a pod in 1/1 Running state. This indicates a healthy replica.

- Promote the healthy replica: Run the cnp tool to promote the healthy replica to primary. For example, if ibm-lh-postgres-edb-1 is healthy:

kubectl cnp promote ibm-lh-postgres-edb ibm-lh-postgres-edb-1

- Force delete the stuck primary (if promotion is blocked): If the promotion fails due to lingering artifacts from the old primary pod, run the following commands to force delete the problematic pod and its PVC.

Note: Ensure your replicas are in 1/1 Running state before proceeding.

kubectl delete pod ibm-lh-postgres-edb-3 --grace-period=0 --force

kubectl delete pvc ibm-lh-postgres-edb-3

- Verify cluster recovery: After cleanup, the new primary pod and PVC should come up. Run the following command to confirm the cluster status:

kubectl cnp status ibm-lh-postgres-edb
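The first step of the workaround, picking a replica in 1/1 Running state, can be scripted when the pod listing is captured as text. The following is a minimal sketch that assumes the standard oc get pods column order (NAME, READY, STATUS). The function name find_healthy_replicas and the sample listing are hypothetical and for illustration only.

```python
def find_healthy_replicas(pods_output, name_prefix="ibm-lh-postgres-edb"):
    """Return names of pods that report READY 1/1 and STATUS Running."""
    healthy = []
    for line in pods_output.splitlines():
        fields = line.split()
        # Need at least NAME, READY, STATUS; skip the header and blank lines.
        if len(fields) < 3 or fields[0] == "NAME":
            continue
        name, ready, status = fields[0], fields[1], fields[2]
        if name.startswith(name_prefix) and ready == "1/1" and status == "Running":
            healthy.append(name)
    return healthy


# Hypothetical listing, shaped like the output of `oc get pods`.
sample = """\
NAME                    READY   STATUS    RESTARTS   AGE
ibm-lh-postgres-edb-1   1/1     Running   0          3d
ibm-lh-postgres-edb-2   1/1     Running   0          3d
ibm-lh-postgres-edb-3   0/1     Running   2          3d
"""
print(find_healthy_replicas(sample))
```

Any pod name that the function returns is a candidate for the promote step. The promotion itself is still done with the kubectl cnp promote command described in the workaround.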

Unable to create schema under catalogs of type blackhole using Create schema action

Applies to: 2.2.0 and later

Fixed in: 2.2.1

Users cannot create a schema under catalogs of type blackhole by using the Create schema action in the Query workspace.

Workaround: Enter the create schema query directly in the Query workspace for the blackhole catalog.

watsonx.data Milvus fails to run a UDI flow

Applies to: 2.2.0 and later

Fixed in: 2.2.1

watsonx.data Milvus fails to run a UDI flow created using the default datasift collection.

Workaround: Use a new datasift collection in place of the default collection.

Document Library (DL) does not have sparse embedding model information

Applies to: 2.2.0 and later

DL does not provide information about the embedding model used for creating sparse embeddings.

Running concurrency tests against the retrieval service generates TEXT_TO_SQL_EXECUTION_ERROR

Applies to: 2.2.0 and later

The system generates TEXT_TO_SQL_EXECUTION_ERROR when running concurrency tests against the retrieval service with a concurrency number greater than 2, accompanied by the following error details: [{"code":"too_many_requests","message":"SAL0148E: Too many requests. Please wait and retry later."}]

Workaround: Complete the following steps:

- Run the following command to set max_parallelism_compute_embeddings to a higher value in the semantic-automation service. The default value is 2.

oc set env -n cpd1-instance deployment/semantic-automation max_parallelism_compute_embeddings=<value>

- Set number_of_workers_for_embeddings, which controls the number of workers in the semantic-automation service, to the same value as max_parallelism_compute_embeddings.

- Provide more CPU and memory to the semantic-embedding pod to match the increased max_parallelism_compute_embeddings and number_of_workers_for_embeddings values. Alternatively, add more replicas for the semantic-embedding service.
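Because the error message asks the caller to wait and retry, a test client can also back off when it sees the too_many_requests code. This is a complementary client-side sketch, not part of the documented workaround. The send_request callable is hypothetical and is assumed to return a list of error dictionaries shaped like the payload quoted above, or an empty list on success.

```python
import time


def call_with_retry(send_request, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call send_request() and retry with exponential backoff while throttled."""
    errors = []
    for attempt in range(max_attempts):
        errors = send_request()
        throttled = any(e.get("code") == "too_many_requests" for e in errors)
        if not throttled:
            return errors
        # Wait 1x, 2x, 4x ... base_delay before the next attempt.
        if attempt < max_attempts - 1:
            sleep(base_delay * (2 ** attempt))
    # Still throttled after the last attempt; return the errors to the caller.
    return errors
```

The sleep parameter is injectable so that the backoff schedule can be checked in tests without real waiting.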

Row filtering based on user groups is not applied when ACLs are ingested from data sources that contain user groups

Applies to: 2.2.0 and later

Issue with ACL ingestion before catalog mapping

Applies to: 2.2.0 and later

If ACL ingestion happens before the user maps the ACL catalogs, the ACLs will be ingested to iceberg_data.

Workaround: To change the storage to another catalog, complete the following steps:

- Add the ACL catalog through the UI.

- Delete the existing ACL schema from the iceberg_data table.

- Restart the CPG pod.

The system occasionally misses information during entity extraction from the document

Applies to: 2.2.0 and later

Some information might be missing when entities are extracted from the document. The issue is intermittent. When 10 or more data assets are imported, the extraction may miss 1-2 documents.

ETL process does not support currency units normalization for monetary entities

Applies to: 2.2.0 and later

The ETL process does not currently support normalizing currency units for monetary entities. This means that if invoices contain amounts in various currencies, the data is extracted as-is without any currency conversion or normalization.

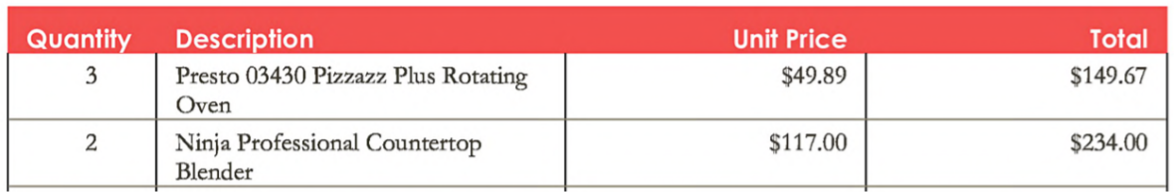

Flat schema for line items may not accurately represent the hierarchical structure of the data

Applies to: 2.2.0 and later

Line items in data assets are extracted using a flat schema, which may not accurately represent the hierarchical structure of the data. Following is an example of the original and the extracted invoice.

Separate Iceberg tables are created in the unstructured ingestion flow instead of appending the new data

Applies to: 2.2.0 and later

In the unstructured ingestion flow, separate Iceberg tables are created instead of appending the new data to the existing table in the same document library and Milvus collection. This might result in incorrect responses from the Prompt Lab. This will be fixed in the GA release.

Chat with Prompt Lab returns inappropriate responses

Applies to: 2.2.0 and later

When the retrieval results are empty, Chat with Prompt Lab may sometimes return inappropriate responses. This will be fixed in the GA release.

Limitations of the Amount and Date columns are in VARCHAR schema

Applies to: 2.2.0 and later

Currently, only the following date formats are accepted:

%b %d, %Y, %m-%d-%Y, %y-%m-%d, %d.%m.%Y, %m.%d.%Y, %d-%M-%Y, %Y-%m-%d
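To check in advance whether a date string matches one of these patterns, the formats can be tried in turn. The following is a minimal sketch that assumes the patterns follow strftime-style directives as implemented by Python's datetime.strptime. The function name matching_formats is hypothetical. The %d-%M-%Y pattern is reproduced exactly as listed; in Python, %M denotes minutes rather than months, so its meaning in watsonx.data may differ.

```python
from datetime import datetime

# Formats copied verbatim from the list above.
ACCEPTED_FORMATS = [
    "%b %d, %Y", "%m-%d-%Y", "%y-%m-%d", "%d.%m.%Y",
    "%m.%d.%Y", "%d-%M-%Y", "%Y-%m-%d",
]


def matching_formats(value):
    """Return every listed format that parses the given string."""
    matches = []
    for fmt in ACCEPTED_FORMATS:
        try:
            datetime.strptime(value, fmt)
        except ValueError:
            continue
        matches.append(fmt)
    return matches


print(matching_formats("2025-03-14"))
print(matching_formats("14.03.2025"))
```

An empty result means that the string matches none of the listed formats. More than one match signals an ambiguous value, such as a string whose day and month are both 12 or lower.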

For Amount type columns, the following rules apply:

- The value must contain at least one numerical character.

- The value can have a maximum of one decimal point.

- The value must adhere to the DOUBLE data type constraints.

- If more than one value is extracted into a single cell, the values are treated as a single value.

- Negative numbers are treated as positive.
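The first three rules can be approximated as a pre-check on raw cell values. This is a sketch under stated assumptions, not the service's actual validation logic. The function name check_amount is hypothetical, and the DOUBLE constraint is interpreted here as "the digits and decimal point parse to a finite 64-bit float".

```python
import math
import re


def check_amount(value):
    """Pre-check a raw cell value against the first three rules above."""
    # Rule 1: the value must contain at least one numerical character.
    if not re.search(r"[0-9]", value):
        return False
    # Rule 2: the value can have at most one decimal point.
    if value.count(".") > 1:
        return False
    # Rule 3 (assumed interpretation): keep only digits and the decimal
    # point, then require the result to be a finite double. Dropping the
    # sign mirrors the rule that negative numbers are treated as positive.
    numeric = re.sub(r"[^0-9.]", "", value)
    return math.isfinite(float(numeric))


for raw in ["$1,234.50", "abc", "1.2.3", "-42"]:
    print(raw, check_amount(raw))
```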

The system generates incorrect results when multiple document libraries exist in a project

Applies to: 2.2.0 and later

Incorrect results are noticed intermittently when multiple document libraries exist in a project. Therefore, it is recommended to have one document library per project.

Limitations during Text-to-SQL conversion

Applies to: 2.2.0 and later

The following limitations are observed with Text to SQL conversion:

- Semantic matching of the schema - The LLM does not correctly match columns with semantic similarity.

- Wrong dialect for date queries.

Limitation for the SQL execution inside the retrieval service

Applies to: 2.2.0 and later

The SQL execution inside the retrieval service fails when multiple Presto connections, catalogs, or schemas are used across multiple document sets in a Document Library.

ACL-enabled data enrichment may fail during ingestion

Applies to: 2.2.0 and later

With ACL enabled, data enrichment may fail during ingestion with the following warning message: computing vectors for asset failed with error. This will be fixed in the upcoming release.

Limitations in customizable schema support in Milvus

Applies to: 2.2.0 and later

Milvus currently imposes the following limitations on customizable schema support:

- Each collection must include at least one vector field.

- A primary key field is required.

Retrieval service behavior: Milvus hybrid search across all vector fields

When multiple vector fields are defined in a collection, Retrieval Service performs a Milvus hybrid search across all of them regardless of their individual relevance to the query.

Ensuring data governance with the document_id field

A document_id field is required to ensure proper data governance and to enable correlation between vector and SQL-based data.

Pipeline extracts incorrect values

Applies to: 2.2.0 and later

The pipeline extracts malformed or irrelevant data, such as including “thru 2025” in the Year field or pulling random text into document_title and name fields.

System merges multiple entities into one column

Applies to: 2.2.0 and later

The system combines distinct entities like GSTIN and State into a single column, reducing data granularity and usability.

Pipeline assigns incorrect data types

Applies to: 2.2.0 and later

The pipeline stores numeric and date fields (for example, deal_size, year) as VARCHAR instead of INT or DATE, which impacts query performance and validation.

System fails to transform values appropriately

Applies to: 2.2.0 and later

The system does not convert shorthand values (for example, 30K to 30000) or standardize currency formats (for example, $39M), affecting data consistency.
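Until such conversion is available, shorthand values can be expanded downstream of extraction. The following is a minimal sketch covering only the K and M suffixes and the $ prefix mentioned above. The function name expand_shorthand is hypothetical, and the accepted input shapes are assumptions. Values that do not match are returned as None instead of being guessed.

```python
import re
from decimal import Decimal

_MULTIPLIERS = {"": 1, "K": 1_000, "M": 1_000_000}
# Optional $, a number with optional thousands separators, optional K/M suffix.
_PATTERN = re.compile(
    r"^\$?\s*([0-9][0-9,]*(?:\.[0-9]+)?)\s*([KM]?)$", re.IGNORECASE
)


def expand_shorthand(raw):
    """Convert strings such as '30K' or '$39M' to a Decimal, else None."""
    match = _PATTERN.match(raw.strip())
    if match is None:
        return None
    number, suffix = match.groups()
    return Decimal(number.replace(",", "")) * _MULTIPLIERS[suffix.upper()]


print(expand_shorthand("30K"))
print(expand_shorthand("$39M"))
```

Decimal is used instead of float so that the expanded amounts stay exact.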

Extraction process handles null values inconsistently

Applies to: 2.2.0 and later

The extraction process inserts the string “None” instead of actual null values, which breaks SQL logic and affects data integrity.
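A downstream consumer can map the placeholder string back to a real null before applying SQL-style logic. The following is a minimal sketch that assumes rows arrive as dictionaries. The function name normalize_nulls and the sample row are hypothetical.

```python
def normalize_nulls(row, null_tokens=("None",)):
    """Replace placeholder strings such as 'None' with a real None."""
    return {
        key: None if isinstance(value, str) and value.strip() in null_tokens else value
        for key, value in row.items()
    }


# Hypothetical extracted row for illustration.
row = {"document_title": "Invoice 17", "deal_size": "None", "year": "2025"}
print(normalize_nulls(row))
```

Only exact matches of the token are replaced, so legitimate text that merely contains the word is left untouched.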

SQL generation logic produces faulty queries due to improper casting and schema confusion

Applies to: 2.2.0 and later

The SQL generation process fails when it uses incorrect casting methods (for example, CAST(REPLACE, ',') without handling symbols like $) and struggles with duplicate columns, resulting in invalid or unusable queries.

Spark allows application submission with locally uploaded data assets

Applies to: 2.2.1 and later

You can create data assets either by uploading files from a local source, or by importing files from connections within the project. However, Spark applications are only compatible with data assets that are uploaded locally. If a Spark job attempts to use a data asset imported from a connection, the job fails to run.