Deployment architecture

To manage the rule execution environment, you package rulesets into RuleApps and you make the XOM accessible to the rule engine.

If you need simultaneous execution of several rulesets, automatic ruleset updates, or transaction management, you must use a managed rule execution environment.

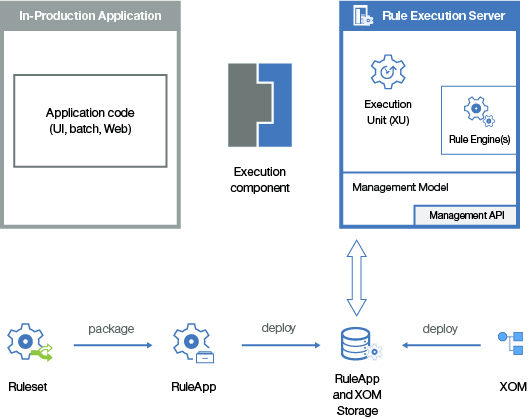

Rule Execution Server is the module for executing rules in a managed environment. You can deploy Rule Execution Server as a centralized service that executes multiple rulesets on the requests of multiple clients. It provides various execution components for developers to integrate rule execution into enterprise applications.
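
The idea of a centralized service that executes several rulesets for several clients, and that picks up ruleset updates without a restart, can be illustrated with a small JDK-only sketch. This is a toy model and not the Rule Execution Server API: the `ToyRuleService` class, the `Ruleset` interface, and the ruleset paths are all hypothetical names chosen for illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Toy stand-in for a managed execution service. This is NOT the Rule Execution Server API. */
final class ToyRuleService {
    /** Hypothetical ruleset type: maps input parameters to output parameters. */
    interface Ruleset extends Function<Map<String, Object>, Map<String, Object>> {}

    // Deployed rulesets, keyed by an illustrative ruleset path.
    private final Map<String, Ruleset> deployed = new ConcurrentHashMap<>();

    /** Deploys a ruleset, or replaces the deployed version while callers keep running. */
    void deploy(String rulesetPath, Ruleset ruleset) {
        deployed.put(rulesetPath, ruleset);
    }

    /** Executes whichever version of the ruleset is deployed at call time. */
    Map<String, Object> execute(String rulesetPath, Map<String, Object> input) {
        Ruleset ruleset = deployed.get(rulesetPath);
        if (ruleset == null) {
            throw new IllegalArgumentException("No ruleset deployed at " + rulesetPath);
        }
        return ruleset.apply(input);
    }

    public static void main(String[] args) {
        ToyRuleService service = new ToyRuleService();
        // Two rulesets are served by the same service instance.
        service.deploy("/loans/eligibility",
                in -> Map.of("approved", (int) in.get("score") >= 600));
        service.deploy("/loans/pricing", in -> Map.of("rate", 4.5));

        Map<String, Object> applicant = Map.of("score", 650);
        System.out.println(service.execute("/loans/eligibility", applicant)); // {approved=true}

        // Redeploying with a stricter threshold takes effect on the next call.
        service.deploy("/loans/eligibility",
                in -> Map.of("approved", (int) in.get("score") >= 700));
        System.out.println(service.execute("/loans/eligibility", applicant)); // {approved=false}
    }
}
```

In this sketch the client refers to a ruleset only by its path, so the deployed logic can change without any change to the calling code.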

Rule Execution Server is based on a modular architecture that can be deployed and run as a set of Java™ SE-compliant Plain Old Java Objects (POJOs) or within a fully Java EE-compliant application server. It provides web-based management through JMX tools, logging, and debugging integration. It packages the rule engine as a Java EE Connector Architecture (JCA) resource adapter. The execution unit (XU) resource adapter implements JCA interactions between the application server and the rule engine.

Choosing the right architecture for managed execution

When choosing the right architecture for managed rule execution, you must decide whether you execute rules on Java SE, on an application server, or as a web service. When defining the software architecture of your application and fitting rule execution into this architecture, you must make some trade-offs. The following table provides some examples:

| Problem | Solution |
|---|---|
| To avoid frequent network usage during rule execution | Instantiate the engine close to the data that it is processing, in as many nodes of the software architecture as you can. |
| To deploy the same decision to more than one application | Take advantage of the independence of transparent decision services and use an architecture that is based on a centralized Service-Oriented Architecture (SOA). |
| For batch processing and guaranteed delivery | Use a Java EE Java Message Service (JMS) message-driven bean (MDB) in the client and a rule session within another application. |
| For scalable online transaction systems | Use a clustered Rule Execution Server and remote rule session EJBs for load balancing. |

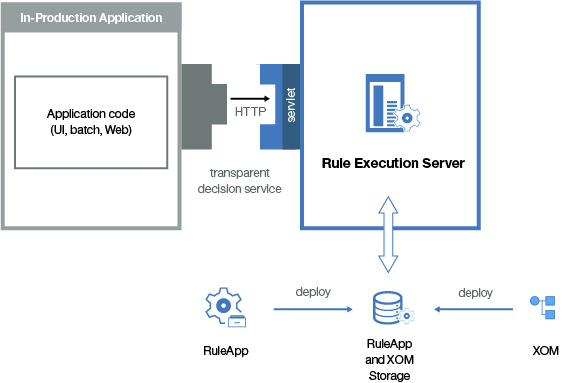

The following figure shows the architecture of a managed application.

Decision Server supports several third-party Business Process Management (BPM) systems and SOA development tools. For more information, go to the IBM support pages.

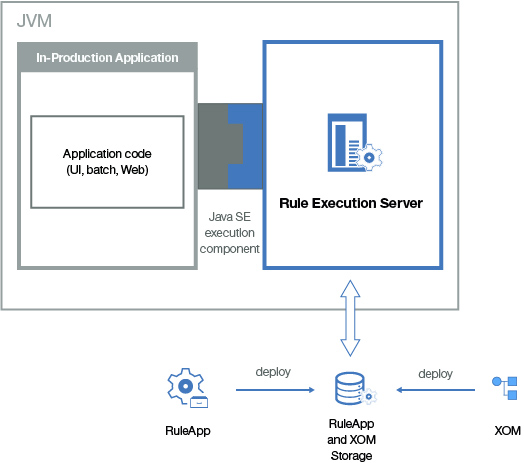

Managing execution in Java SE

When you execute a ruleset in Java SE, you develop an execution component that handles Java SE requests between the application and Rule Execution Server. If your application and Rule Execution Server console do not run on the same Java virtual machine (JVM) or JMX MBean server, you must use the TCP/IP management mode of the console to manage execution unit instances.

The following figure shows how a ruleset is executed in Java SE.

For web-based applications, it is better to have the rule engine in the same JVM as the servlet container to help minimize the need for expensive object serialization between the JVM that hosts the servlet container and the JVM that hosts the rule engine.
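
To make the serialization cost concrete, the following JDK-only sketch serializes a batch of request objects the way a call between two JVMs would, and then reads them back. The `LoanRequest` class and the batch size are hypothetical. The sketch only shows that crossing a JVM boundary means encoding every object to bytes and rebuilding a copy on the other side, whereas a call inside one JVM passes a reference.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.util.ArrayList;

final class SerializationCostSketch {
    /** Hypothetical request object that an application passes to the rule engine. */
    static final class LoanRequest implements Serializable {
        private static final long serialVersionUID = 1L;
        final String applicant;
        final int score;

        LoanRequest(String applicant, int score) {
            this.applicant = applicant;
            this.score = score;
        }
    }

    /** Encodes the object graph to bytes, as a call to another JVM would have to. */
    static byte[] serialize(Serializable value) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    /** Rebuilds a copy of the object graph on the receiving side. */
    static Object deserialize(byte[] wire) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(wire))) {
            return in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        ArrayList<LoanRequest> batch = new ArrayList<>();
        for (int i = 0; i < 10_000; i++) {
            batch.add(new LoanRequest("applicant-" + i, 500 + (i % 300)));
        }
        byte[] wire = serialize(batch);
        Object copy = deserialize(wire);
        System.out.println("Bytes that would cross the JVM boundary: " + wire.length);
        System.out.println("Receiver works on a copy: " + (copy != batch));
    }
}
```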

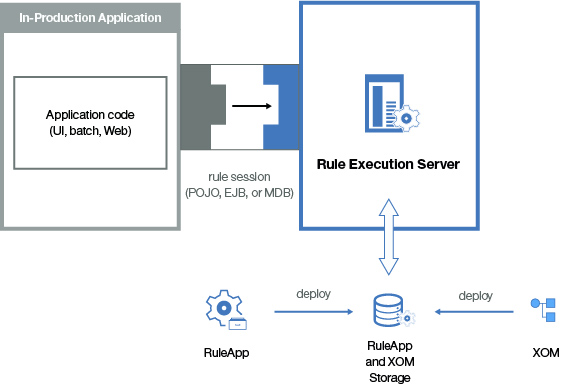

Managing execution in an application server (Java EE)

When you execute rulesets in an application server, you can choose between the following options:

- Use remote persistence: You can use an EJB or MDB rule session to handle requests between the application and Rule Execution Server.

- Use local persistence: You can use a POJO rule session or the local interface of an EJB.

The following figure shows how to execute a ruleset in Java EE.

Synchronous calls to a decision service from a remote client use the stateful or stateless EJB. Synchronous calls to a decision service from a local client use the local interfaces of the stateful or stateless EJB, or the POJOs.

EJBs are useful for their remote client access capabilities, declarative transactions, and security descriptors. POJOs are useful for their simpler packaging and deployment, and for use outside the EJB container.

Applications that require asynchronous calls to decision services can use message-driven rule beans (MDBs). MDBs provide a scalable means to call rulesets where high latency or high peak load is expected.
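
The asynchronous pattern can be sketched with JDK classes only. The example below is a simulation, not JMS or an actual message-driven bean: an in-memory queue stands in for the message destination, a fixed set of consumer threads stands in for the bean pool, and the `AsyncDecisionSketch` class and its scoring rule are hypothetical. It shows how callers enqueue a request and continue, while consumers absorb the load at their own pace.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Function;

/** In-memory simulation of message-driven rule execution. Not JMS, not an MDB. */
final class AsyncDecisionSketch implements AutoCloseable {
    /** A queued request together with the handle used to deliver its reply. */
    private static final class Message {
        final int payload;
        final CompletableFuture<String> reply = new CompletableFuture<>();

        Message(int payload) {
            this.payload = payload;
        }
    }

    private final BlockingQueue<Message> queue = new LinkedBlockingQueue<>();
    private final Function<Integer, String> ruleset;
    private final ExecutorService consumers;

    AsyncDecisionSketch(int consumerCount, Function<Integer, String> ruleset) {
        this.ruleset = ruleset;
        this.consumers = Executors.newFixedThreadPool(consumerCount);
        for (int i = 0; i < consumerCount; i++) {
            consumers.execute(this::consumeLoop);
        }
    }

    /** Takes messages one at a time and applies the ruleset, until interrupted. */
    private void consumeLoop() {
        try {
            while (true) {
                Message message = queue.take();
                try {
                    message.reply.complete(ruleset.apply(message.payload));
                } catch (RuntimeException e) {
                    message.reply.completeExceptionally(e);
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // shutdown requested
        }
    }

    /** Enqueues a request and returns immediately; the reply arrives later. */
    CompletableFuture<String> send(int payload) {
        Message message = new Message(payload);
        queue.add(message);
        return message.reply;
    }

    @Override
    public void close() {
        consumers.shutdownNow(); // interrupts consumers blocked on the queue
    }

    public static void main(String[] args) {
        try (AsyncDecisionSketch service =
                new AsyncDecisionSketch(2, score -> score >= 600 ? "approved" : "rejected")) {
            CompletableFuture<String> first = service.send(650);
            CompletableFuture<String> second = service.send(540);
            System.out.println(first.join());  // approved
            System.out.println(second.join()); // rejected
        }
    }
}
```

Because the queue buffers requests, a burst of callers does not need a matching burst of consumer threads, which is the property that makes the message-driven approach attractive under peak load.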

Performance and scalability in an application server depend on settings such as the following:

- The number of request threads

- The size of EJB pools

- The size of JCA pools

- The use of native input/output or pure Java input/output

- The pool reclamation policy

- The data replication strategy for clustered deployments
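
The effect of a pool size can be sketched with a semaphore. The example below is a toy model and not a JCA or EJB pool: the `BoundedExecutionPool` class is hypothetical, and each simulated ruleset call simply sleeps for a few milliseconds. It shows that, whatever the number of request threads, the number of executions in progress never exceeds the pool size, so the remaining callers wait.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

/** Toy bounded pool: at most poolSize executions run at once. Not a JCA pool. */
final class BoundedExecutionPool {
    private final Semaphore permits;
    private final AtomicInteger active = new AtomicInteger();
    private final AtomicInteger peak = new AtomicInteger();

    BoundedExecutionPool(int poolSize) {
        this.permits = new Semaphore(poolSize);
    }

    /** Runs the call once a permit is free; callers beyond the pool size block here. */
    <T> T execute(Supplier<T> rulesetCall) {
        permits.acquireUninterruptibly();
        try {
            // Record the highest number of simultaneous executions seen so far.
            peak.accumulateAndGet(active.incrementAndGet(), Math::max);
            return rulesetCall.get();
        } finally {
            active.decrementAndGet();
            permits.release();
        }
    }

    int peakConcurrency() {
        return peak.get();
    }

    /** Starts the given number of caller threads and reports the observed peak. */
    static int demoPeak(int poolSize, int callers) {
        BoundedExecutionPool pool = new BoundedExecutionPool(poolSize);
        Thread[] threads = new Thread[callers];
        for (int i = 0; i < callers; i++) {
            threads[i] = new Thread(() -> pool.execute(() -> {
                sleepQuietly(20); // simulated ruleset execution time
                return "done";
            }));
            threads[i].start();
        }
        for (Thread thread : threads) {
            try {
                thread.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return pool.peakConcurrency();
    }

    private static void sleepQuietly(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        // Eight request threads compete for a pool of two.
        System.out.println("Peak concurrent executions: " + demoPeak(2, 8));
    }
}
```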

If your application and Rule Execution Server console do not run on the same application server or cluster, you must use the TCP/IP management mode of the console to manage all the execution units (XUs).

See the documentation of your application server for specific information related to your environment.

Executing rulesets as a web service

When you expose a ruleset as a web service, your application is typically remote. Requests to Rule Execution Server are carried by the HTTP protocol. You develop a transparent decision service to handle requests between the application and Rule Execution Server. Rule Designer provides tools for generating transparent decision services.
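
The request and response flow over HTTP can be sketched with the JDK HTTP client. Everything specific in this example is an assumption made for illustration: the endpoint path `/decision/eligibility`, the JSON payload, and the scoring rule are hypothetical, and a local stub server stands in for the decision service so that the example runs on its own. The message format of an actual transparent decision service is defined by the service itself and is not shown here.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class DecisionServiceCallSketch {
    private static final String STUB_PATH = "/decision/eligibility"; // hypothetical path
    private static final Pattern SCORE = Pattern.compile("\"score\"\\s*:\\s*(\\d+)");

    /** Starts a local stub that stands in for a remote decision service. */
    static HttpServer startStub() throws IOException {
        HttpServer server =
                HttpServer.create(new InetSocketAddress(InetAddress.getByName("127.0.0.1"), 0), 0);
        server.createContext(STUB_PATH, exchange -> {
            String request =
                    new String(exchange.getRequestBody().readAllBytes(), StandardCharsets.UTF_8);
            Matcher matcher = SCORE.matcher(request);
            if (!matcher.find()) {
                exchange.sendResponseHeaders(400, -1); // no body
                exchange.close();
                return;
            }
            // A real service would execute a ruleset; the stub applies one fixed rule.
            boolean approved = Integer.parseInt(matcher.group(1)) >= 600;
            byte[] body = ("{\"approved\":" + approved + "}").getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }

    /** Client side: posts the payload and returns the response body. */
    static String callDecision(URI endpoint, String jsonPayload)
            throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder().version(HttpClient.Version.HTTP_1_1).build();
        HttpRequest request = HttpRequest.newBuilder(endpoint)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonPayload))
                .build();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }

    /** Starts the stub, sends one request, and stops the stub again. */
    static String runDemo(int score) {
        HttpServer stub = null;
        try {
            stub = startStub();
            URI endpoint =
                    URI.create("http://127.0.0.1:" + stub.getAddress().getPort() + STUB_PATH);
            return callDecision(endpoint, "{\"score\":" + score + "}");
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            if (stub != null) {
                stub.stop(0);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(runDemo(650)); // {"approved":true}
    }
}
```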

The following figure shows a transparent decision service environment.

You can use the following types of transparent decision service:

- A hosted transparent decision service provided by Decision Server.

- A monitored transparent decision service implemented with the Java API for XML Web Services (JAX-WS 2.1.1). Use this option if the XOM is Java. For more information, see the JAX-WS RI 2.1.1 page. (Monitored transparent decision services are deprecated in V8.8.1.)

Managing transparent decision services involves the following activities:

- Controlling access to the implementation rules for a service.

- Providing an audit trail for all implementation changes.

- Reporting at the business level.

- Monitoring rulesets at run time if they implement a transparent decision service.

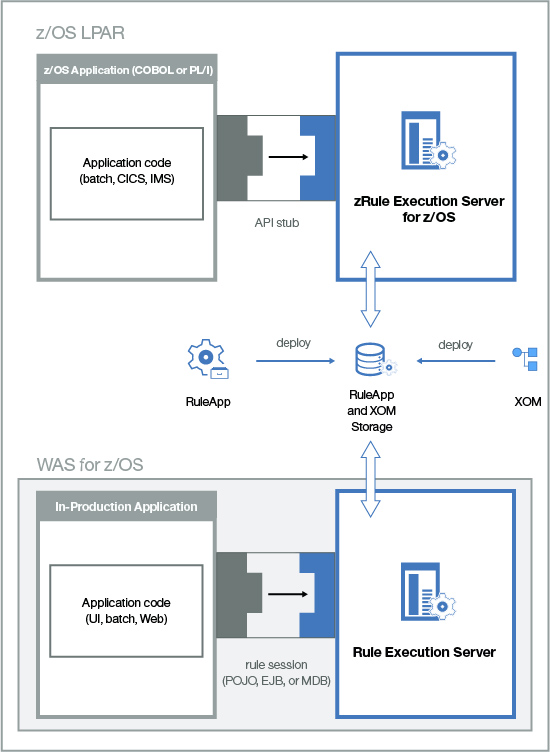

Managing execution on z/OS

On z/OS, you can execute rulesets in the following ways:

- Use Java or z/OS clients (COBOL or PL/I) to call Rule Execution Server on WebSphere® Application Server for z/OS. Use rule sessions in the same way that you use them on a distributed platform. Use WebSphere Application Server with WebSphere Optimized Local Adapters (WOLA) to execute rulesets from a z/OS client.

- Use zRule Execution Server for z/OS to offload rule execution from your z/OS applications (COBOL or PL/I). Rulesets are executed on an instance of Rule Execution Server running in a JVM.

Deployment of the execution artifacts can be to the file system or DB2®.

The following figure shows the execution and management options on z/OS. The persistence layer can be shared between Rule Execution Server and zRule Execution Server for z/OS.